Zeek - 高度定制化的 DNS事件 + 文件还原

背景

本地环境中部署了2台NTA(Suricata)接收内网12台DNS服务器的流量,用于发现DNS请求中存在的安全问题。近一段时间发现2台NTA服务器运行10小时左右就会自动重启Suricata进程,看了一下日志大概意思是说内存不足,需要强制重启释放内存。说起这个问题当时也是花了一些时间去定位。首先DNS这种小包在我这平均流量也就25Mbps,所以大概率不是因为网卡流量过大而导致的。继续定位,由于我们这各个应用服务器会通过内网域名的形式进行接口调用,所以DNS请求量很大。Kibana上看了一下目前

dns_type: query事件的数据量320,000,000/天 ~ 350,000,000/天(这仅仅是dns_type: query数据量,dns_type: answer数据量也超级大)。由于Suricata不能在数据输出之前对指定域名进行过滤,这一点确实很傻,必须吐槽。当时的规避做法就是只保留dns_type: query事件,既保证了Suricata的正常运行也暂时满足了需求。近一段时间网站的某个上传接口被上传了包含恶意Payload的jpg与png。虽然Suricata有检测到,但也延伸了新的需求,如何判断文件是否上传成功以及文件还原与提取HASH。虽然这两点Suricata自身都可以做,但是有一个弊端不得不说。例如Suricata只要开启

file_info就会对所有支持文件还原的协议进行HASH提取。由于我们是电商,外部访问的数据量会很大,Suricata默认不支持过滤,针对用户访问的HTML网页这种也会被计算一个HASH,这个量就非常的恐怖了。

总结:针对以上2个问题,我需要的是一个更加灵活的NTA框架,下面请来本次主角 - Zeek。

需求

- 过滤内部DNS域名,只保留外部DNS域名的请求与响应数据;

- 更灵活的文件还原与提取HASH;

实现

1. 过滤本地DNS请求

DNS脚本

dns-filter_external.zeek

1 | redef Site::local_zones = {"canon88.github.io", "baidu.com", "google.com"}; |

脚本简述:

通过

Site::local_zones定义一个内部的域名,这些域名默认都是我们需要过滤掉的。例如,在我的需求中,多为内网的域名;- 优化性能, 只开启DNS流量解析。由于这2台NTA只负责解析DNS流量,为了保留针对域名特征检测的能力,我选择了Suricata与Zeek共存,当然Zeek也可以做特征检测,只是我懒。。。通过

Analyzer::enable_analyzer(Analyzer::ANALYZER_DNS);指定了只对DNS流量进行分析; - 过滤日志并输出;

- 优化性能, 只开启DNS流量解析。由于这2台NTA只负责解析DNS流量,为了保留针对域名特征检测的能力,我选择了Suricata与Zeek共存,当然Zeek也可以做特征检测,只是我懒。。。通过

日志样例:

1 | { |

总结:

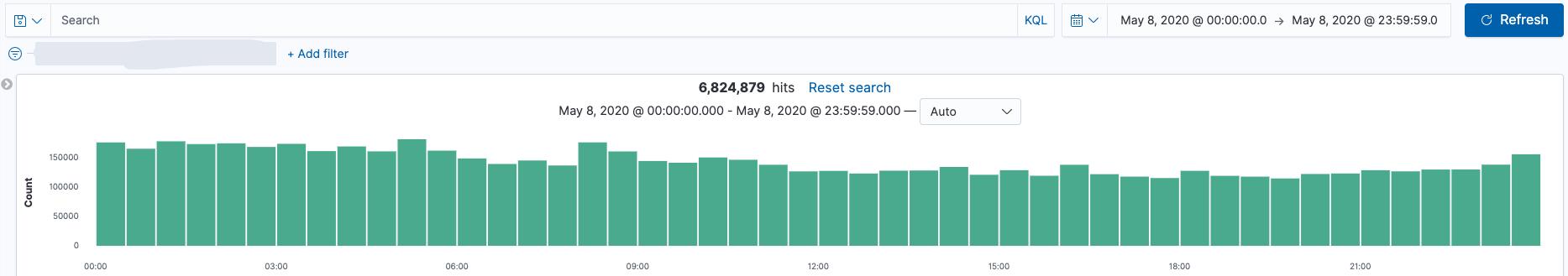

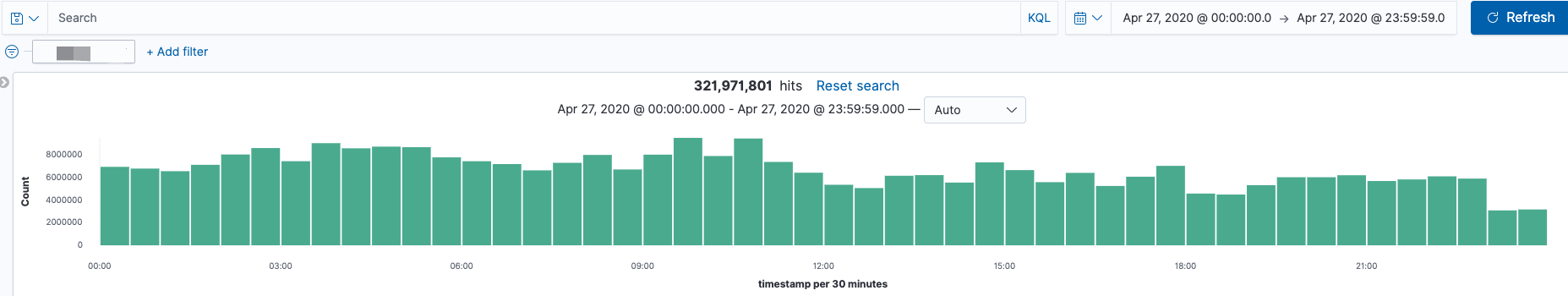

当采用Zeek过滤了DNS请求后,现在每天的DNS数据量 6,300,000/天 ~ 6,800,000/天(query + answer),对比之前的数据量320,000,000/天 ~ 350,000,000/天(query)。数据量减少很明显,同时也减少了后端ES存储的压力。

- Zeek DNS (query + answer)

- Suricata DNS (query)

2. 更灵活的文件还原与提取文件HASH

文件还原脚本

file_extraction.zeek

Demo脚本,语法并不是很优雅,切勿纠结。

1 | @load base/frameworks/files/main |

脚本简述:

1. 支持针对指定hostname,method,url,文件头进行hash的提取以及文件还原;

2. 默认文件还原按照年月日进行数据的存储,保存名字按照MD5名称命名;

3. 丰富化了文件还原的日志,增加HTTP相关字段;

日志样例

1 | { |

文件还原

这是其中一个包含恶意Payload还原出的图片样例

1 | $ ll /data/logs/zeek/extracted_files/ |

总结:

通过Zeek脚本扩展后,可以“随意所欲”的获取各种类型文件的Hash以及定制化的进行文件还原。

头脑风暴

当获取文件上传的Hash之后,可以尝试扩展出以下2个安全事件:

判断文件是否上传成功。

通常第一时间会需要定位文件是否上传成功,若上传成功需要进行相关的事件输出,这个时候我们可以通过采用HIDS进行文件落地事件的关联。

关联杀毒引擎/威胁情报。

将第一个关联好的事件进行Hash的碰撞,最常见的是将HASH送到VT或威胁情报。

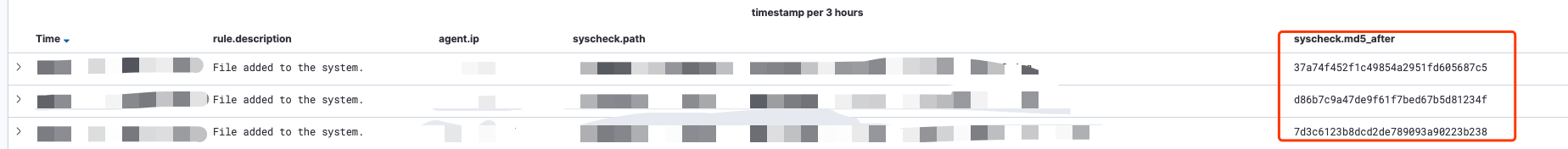

这里以Wazuh事件为例,将Zeek的文件还原事件与Wazuh的新增文件事件进行关联,关联指标采用Hash。

a. Zeek事件

1 | { |

b. Wazuh 事件