Installing PF_RING

1 | # Installing from GIT |

1 | $ git clone --recursive https://github.com/zeek/zeek |


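As enterprise security programs mature, information security work moves into the "happy" (read: grueling) operations phase. Any discussion of security operations inevitably comes around to incident response. Responding to a security incident often requires cross-department collaboration, and some incidents require sustained tracking and investigation. Recording every response step is therefore especially important: once the incident is resolved, the record lets us fold the lessons learned back into our processes and strengthen overall security capability. From an automation standpoint, we should also think about turning the response process into reusable Playbooks so we can react to attacks quickly and shorten the time between infection and containment.

Here are my pain points, or, put differently, the problems we needed to solve in day-to-day operations: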

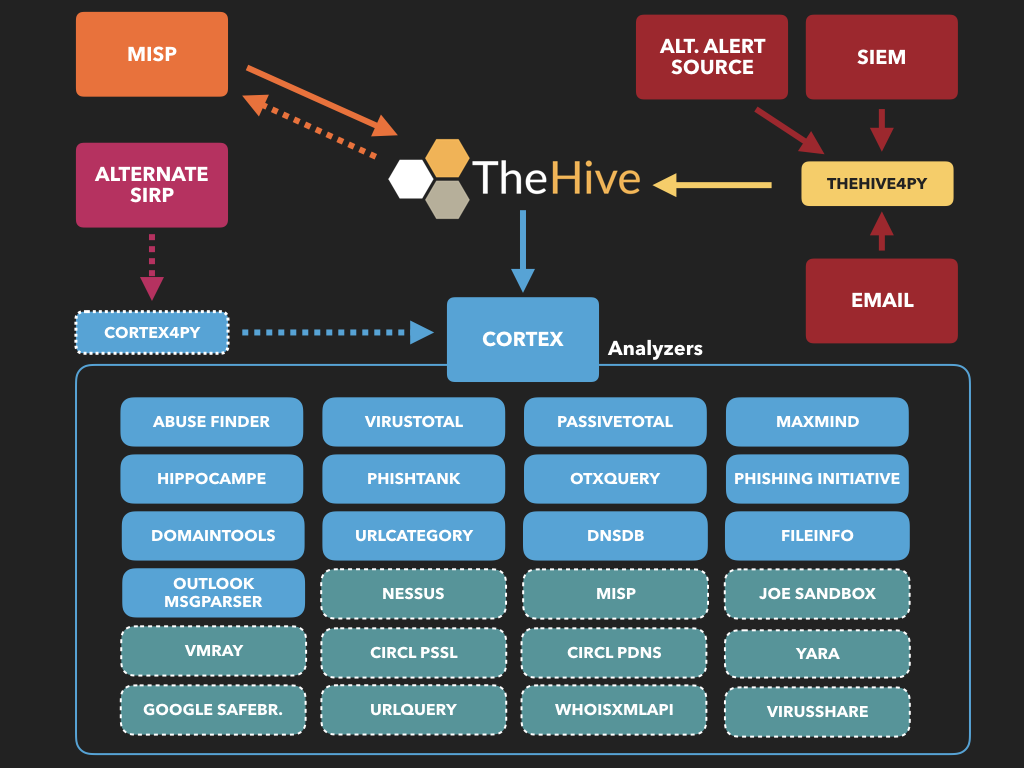

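I eventually chose *TheHive*, a security incident response platform, to support my daily security operations work. Unlike SIEM-type products, TheHive mainly handles events that actually need a response. Here is my rough summary of its characteristics:

For reasons of space, this post mainly covers the configuration that needs adjusting when running TheHive as a cluster. For installing TheHive itself, see the Step-by-Step guide. If you only want to test it, you can use the Docker or VM images provided on the official site.

According to the official documentation, a TheHive cluster involves four parts. The following sections describe the adjustments needed for TheHive, Cortex, Cassandra, and Minio in a clustered deployment.

We treat node 1 as the master node and configure the akka component by editing /etc/thehive/application.conf, as shown below: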

1 | ## Akka server |

Cluster configuration

/etc/cassandra/cassandra.yaml

1 | cluster_name: 'thp' |

/etc/cassandra/cassandra-topology.properties

1 | $ rm -rf /etc/cassandra/cassandra-topology.properties |

Start the service

1 | $ service cassandra start |

1 | $ nodetool status |

Initialize the database

cassandra/cassandra)

1 | $ cqlsh th01 -u cassandra |

1 | $ cqlsh <ip node X> -u cassandra |

thehive KEYSPACE

1 | cassandra@cqlsh> CREATE KEYSPACE thehive WITH replication = {'class': 'SimpleStrategy', 'replication_factor': '3' } AND durable_writes = 'true'; |

thehive, and grant thehive permissions (choose a password)

1 | cassandra@cqlsh> CREATE ROLE thehive WITH LOGIN = true AND PASSWORD = 'HelloWorld'; |

TheHive configuration

Because the latest TheHive cluster relies on ElasticSearch for indexing, the following configuration needs to be updated as well:

/etc/thehive/application.conf configuration

1 | db.janusgraph { |

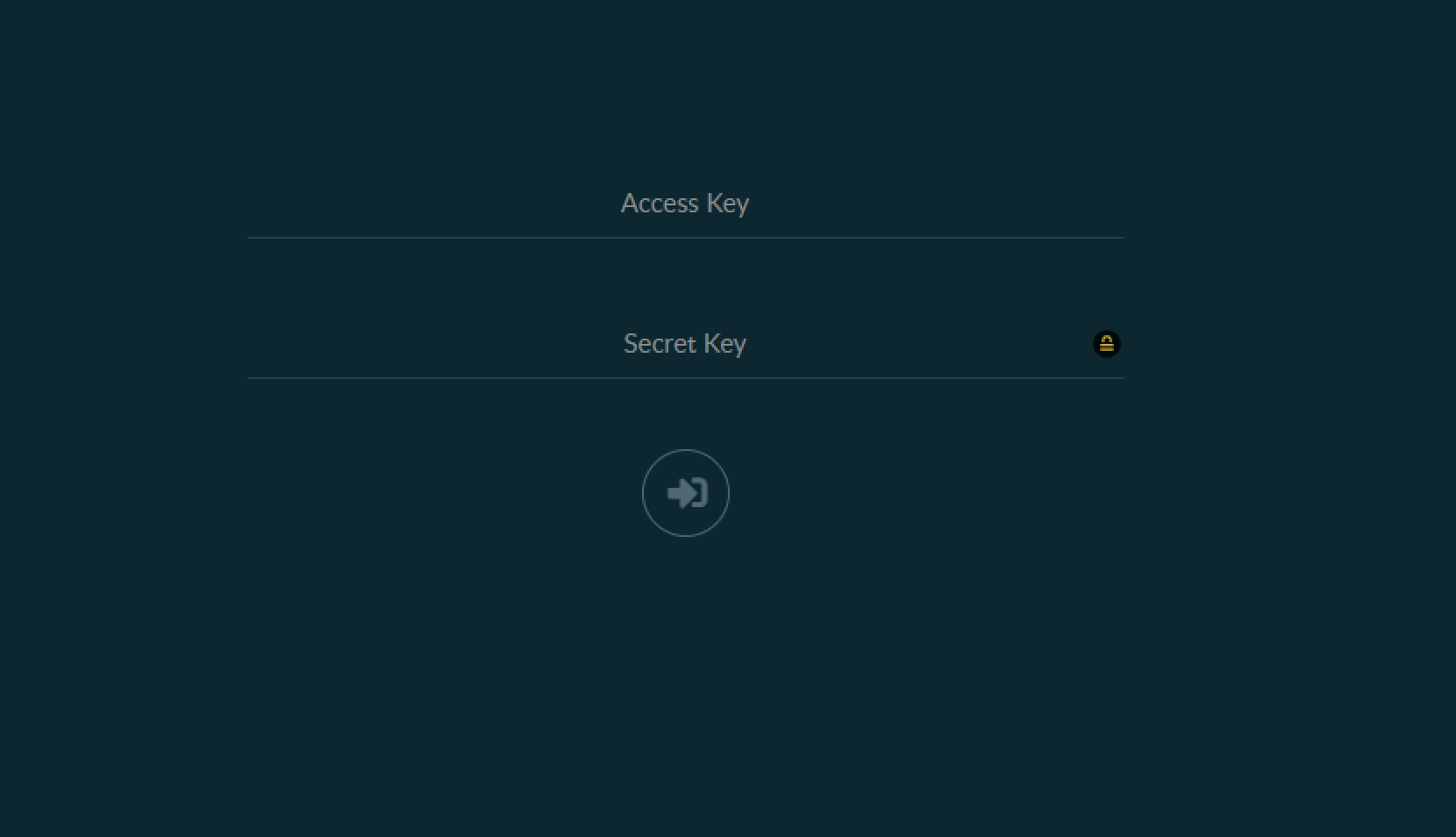

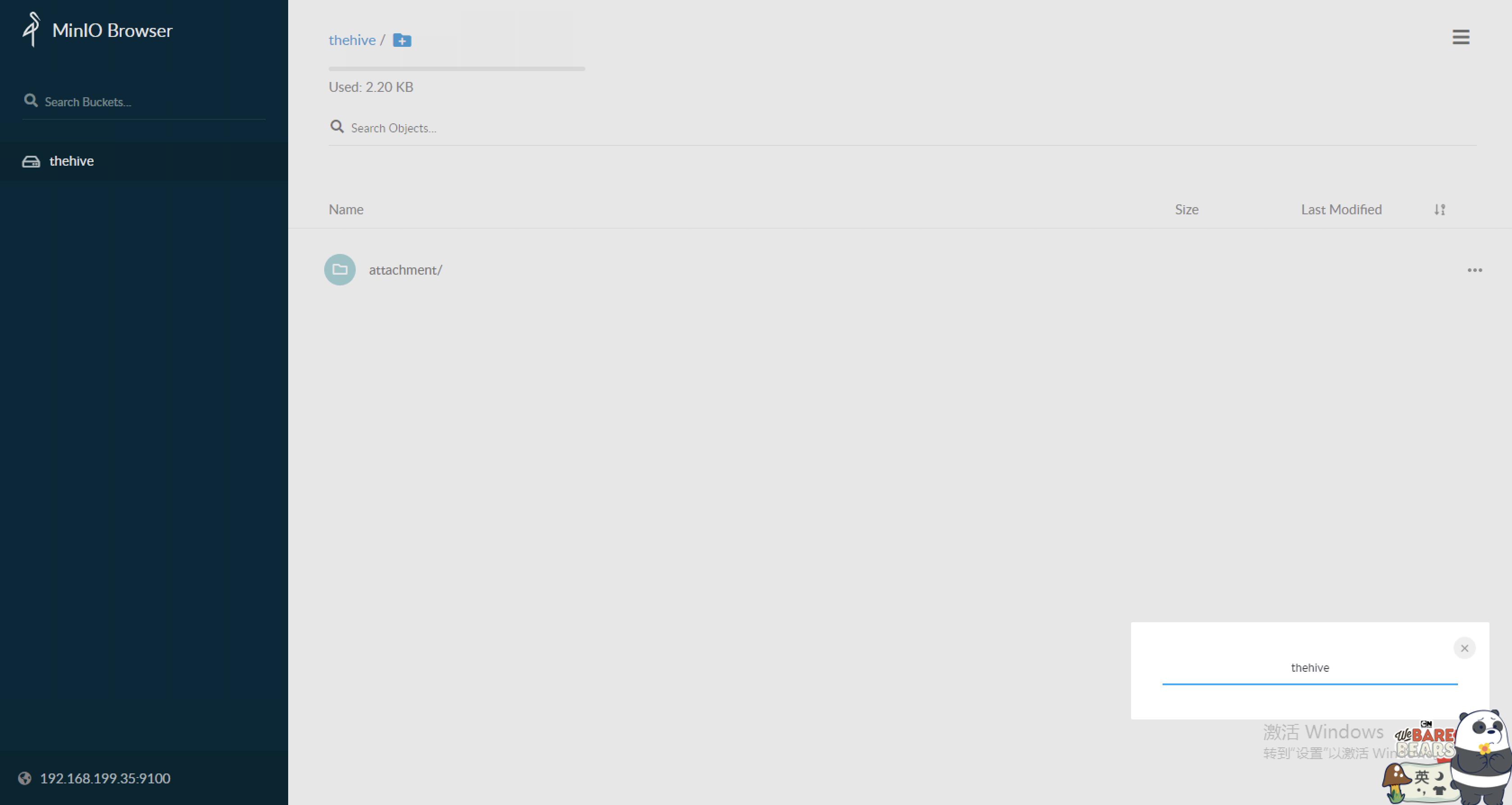

My file storage uses Minio, so it needs to be configured here. A simpler option is to use S3.

1 | $ mkdir /opt/minio |

1 | $ adduser minio |

Create data volumes

Create at least 2 data volumes on each server

1 | $ mkdir -p /srv/minio/{1,2} |

1 | $ vim /etc/hosts |

1 | $ cd /opt/minio |

Configuration

/opt/minio/etc/minio.conf

1 | MINIO_OPTS="server --address :9100 http://minio{1...3}/srv/minio/{1...2}" |

/usr/lib/systemd/system/minio.service

1 | [Unit] |

Start

1 | $ systemctl daemon-reload |

Note: remember to check permissions here. With the wrong permissions the process will not start.

Create the bucket

Edit the TheHive configuration file /etc/thehive/application.conf

1 | ## Attachment storage configuration |

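Edit the Cortex configuration file /etc/cortex/application.conf

Note that the official default configuration file has a small problem: when Elastic authentication is used, username must be changed to user, otherwise an error is reported.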

1 | play.http.secret.key="QZUm2UgZYXF6axC" |

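Cortex 3 added support for dockerized analyzers, which greatly simplifies installation. We no longer need to wrestle with Python or other library dependencies when installing plugins.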

1 | # Ubuntu 18.04 |

1 | $ usermod -a -G docker cortex |

/etc/cortex/application.conf, enable analyzers.json

1 | ## ANALYZERS |

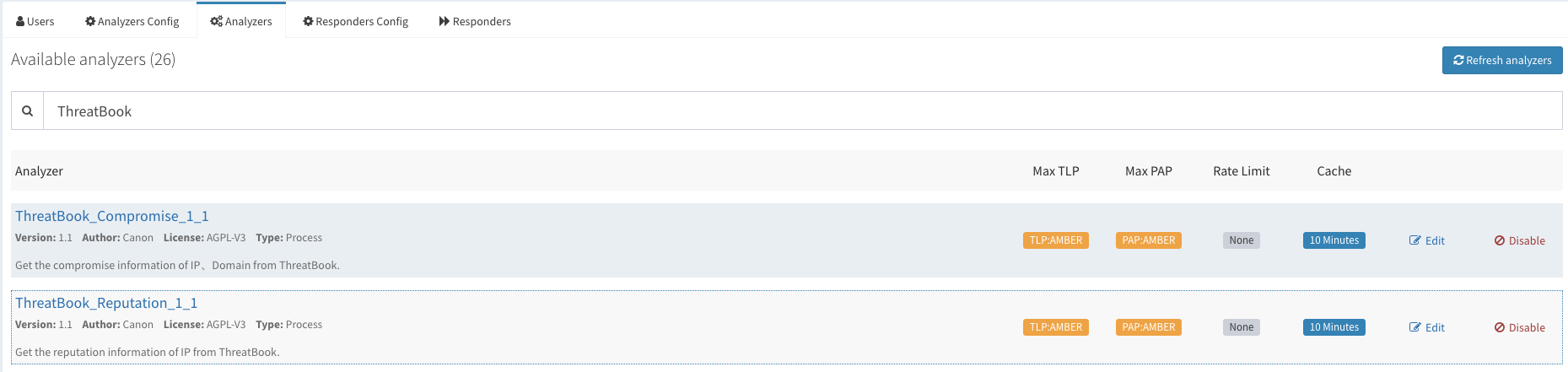

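As mentioned earlier, Cortex ships with a rich set of Analyzers and Responders plugins that help operators analyze and respond to security events quickly. In practice, different scenarios still call for some custom plugins. The official site documents plugin creation in detail; see How to Write and Submit an Analyzer. Some of the plugins we added are included below:

Enough talk, show me the code!

We have purchased commercial threat intelligence (ThreatBook, 微步在线), so we integrated it with TheHive as well.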

1 | #!/usr/bin/env python3 |

1 | #!/usr/bin/env python3 |

1 | { |

1 | { |

1 | #!/usr/bin/env python3 |

1 | #!/usr/bin/env python3 |

1 | { |

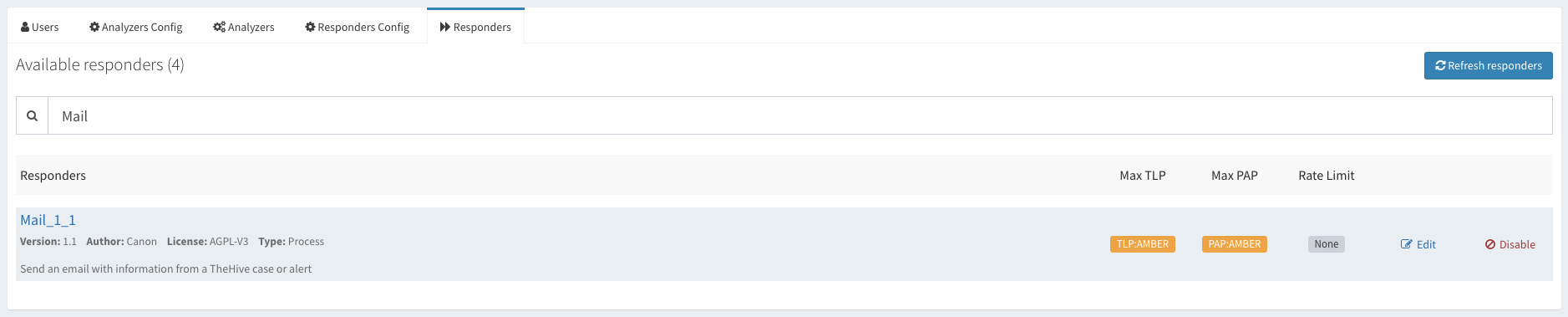

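Cortex has a default plugin (Mailer) for sending email. After trying it, I found it rather painful: it does not support multiple recipients, and when you send mail from Observables the recipient is, of all things, the mail-type IoC... WTF! Don't ask how I know; that is how the source code is written. So I rolled my own.

Main features: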

1 | #!/usr/bin/env python3 |

1 | { |

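By default, TheHive recommends integrating with MISP for intelligence feeds. Since we built our own threat intelligence repository, I wrote a Responders plugin that submits IoC intelligence during analysis. I won't post the code here; below is a sample submission: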

1 | { |

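/etc/cortex/Cortex-Analyzers/analyzers

/etc/cortex/Cortex-Analyzers/responders

1 | $ ll /etc/cortex/Cortex-Analyzers/analyzers |

Edit the configuration file /etc/cortex/application.conf

I recommend keeping newly added plugins separate from the official ones, which makes later maintenance easier.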

1 | ## ANALYZERS |

Analyzers

Responders

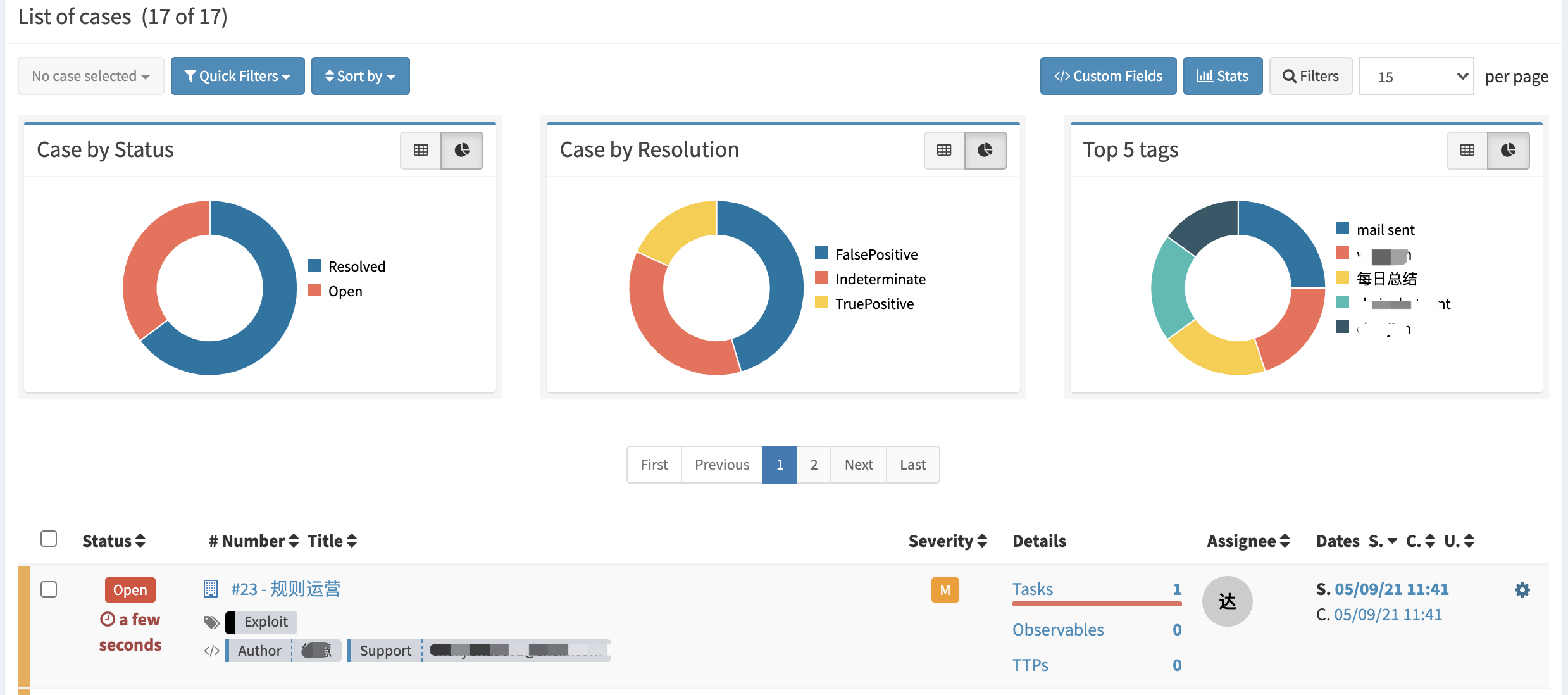

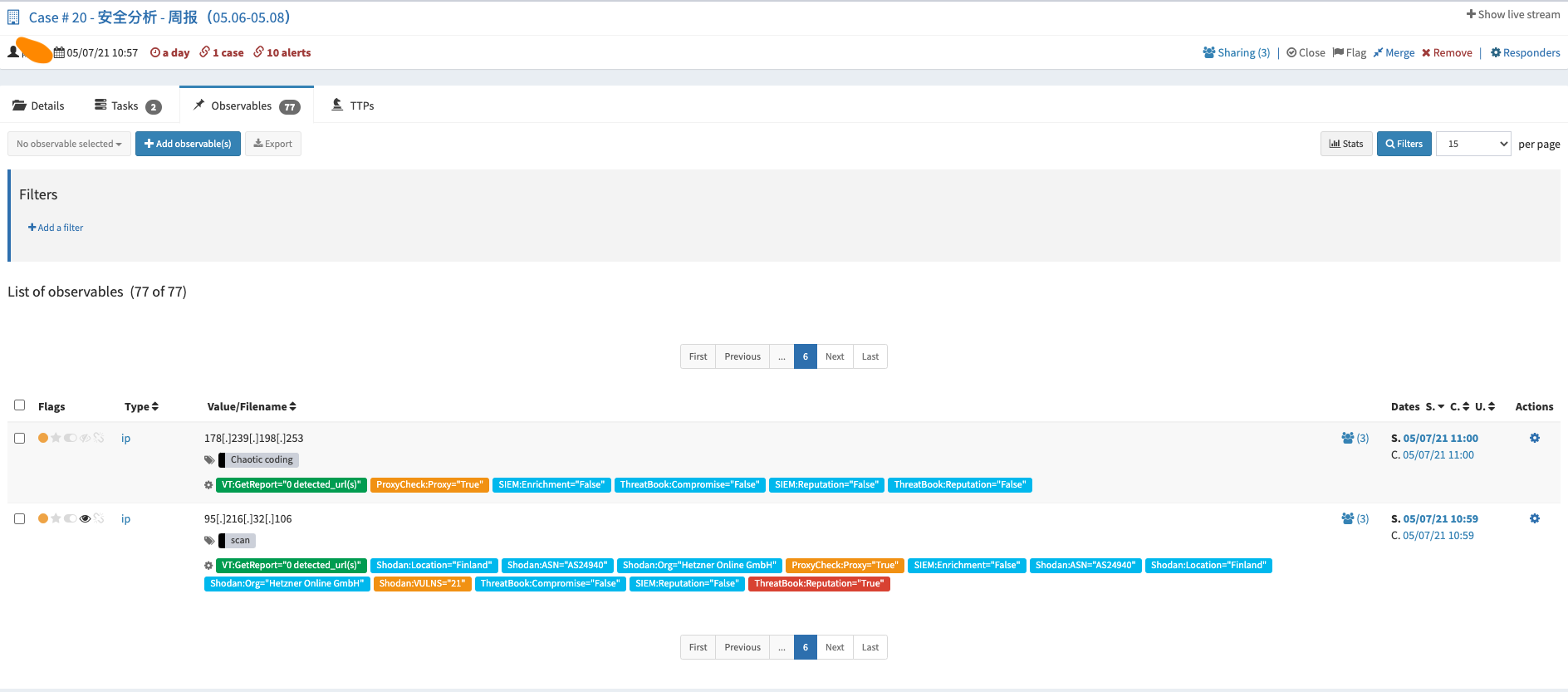

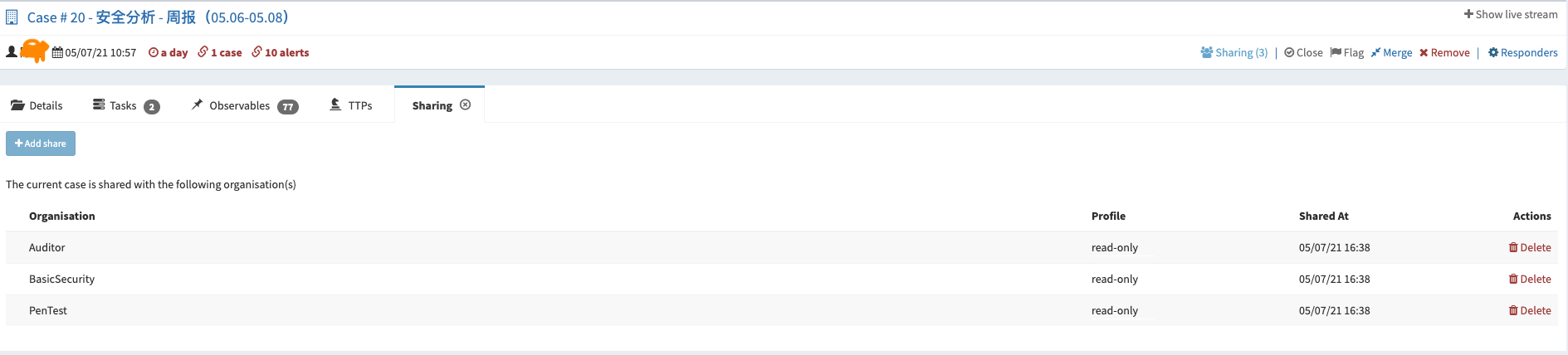

Next, what we actually use TheHive for. We did not have many use cases at the beginning, and more will need to be explored over time.

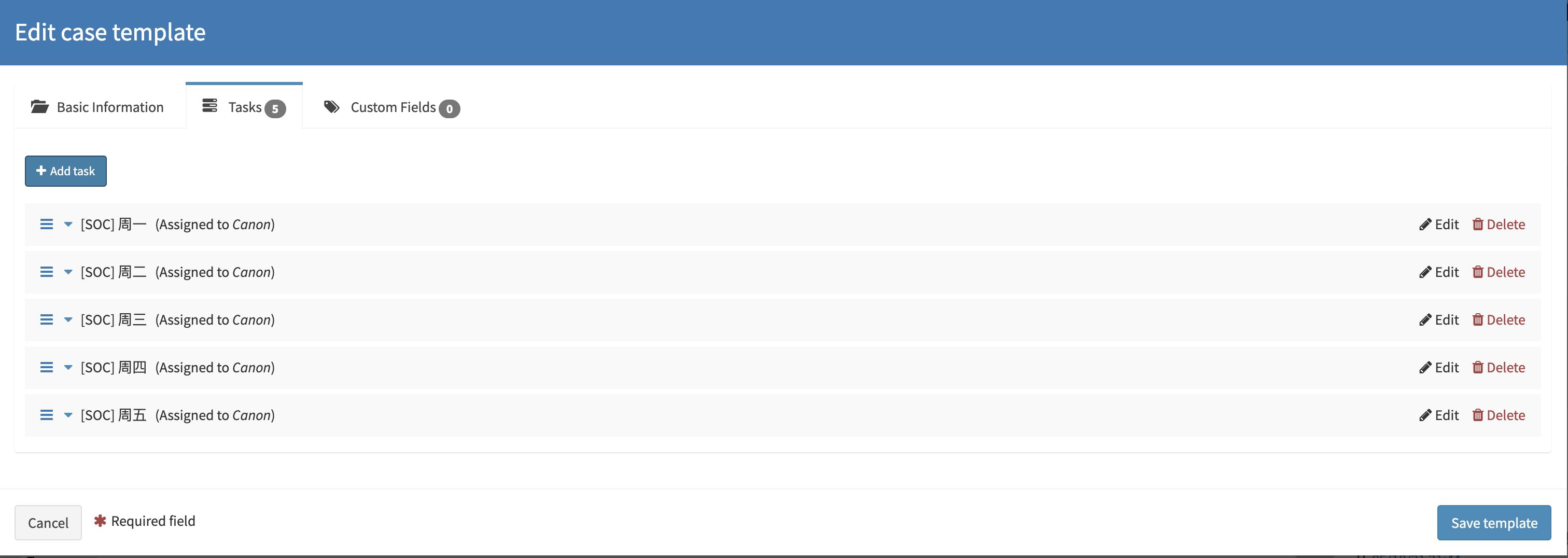

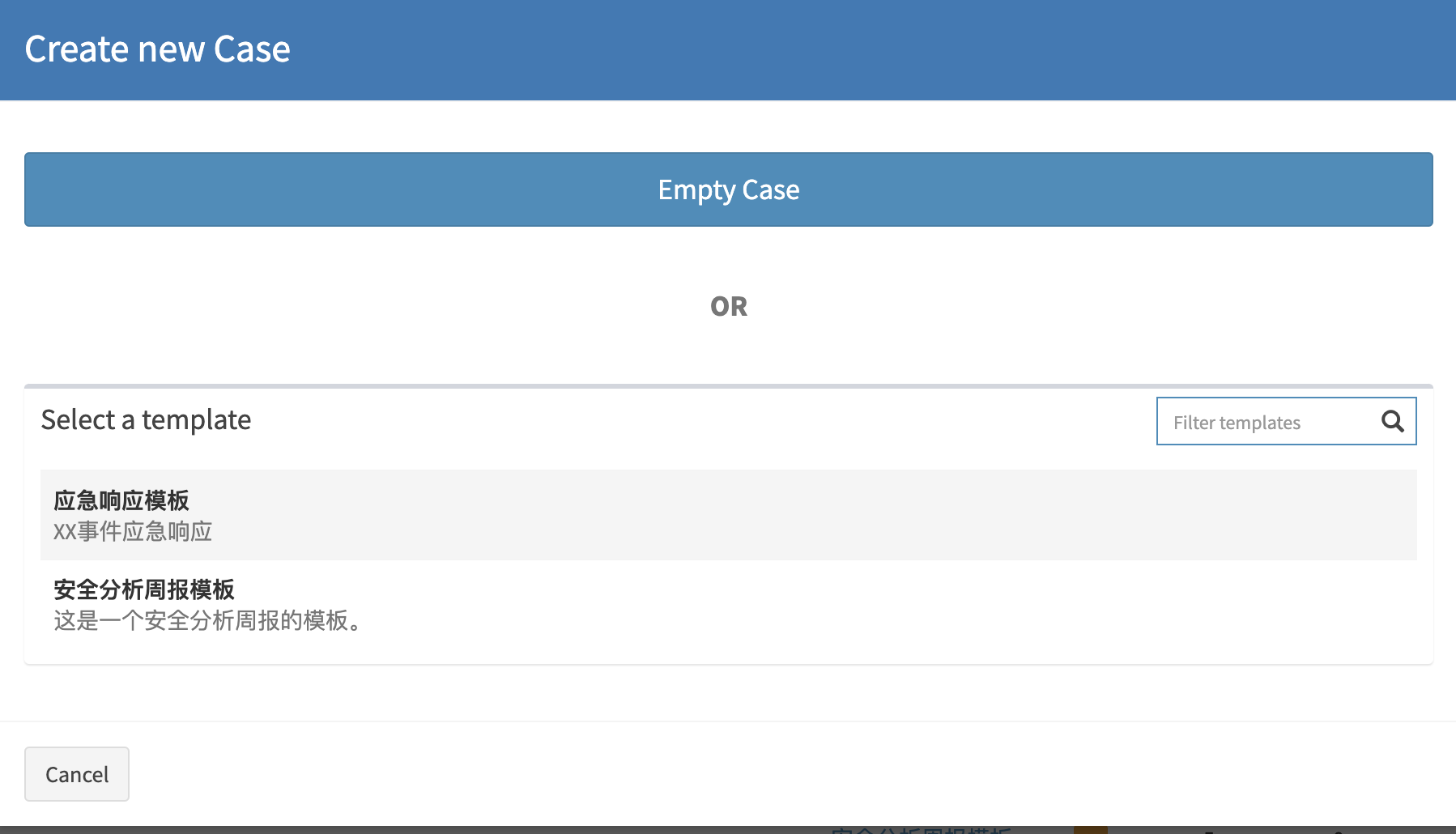

Create templates in advance, for example in Playbook form, so they can be referenced quickly later.

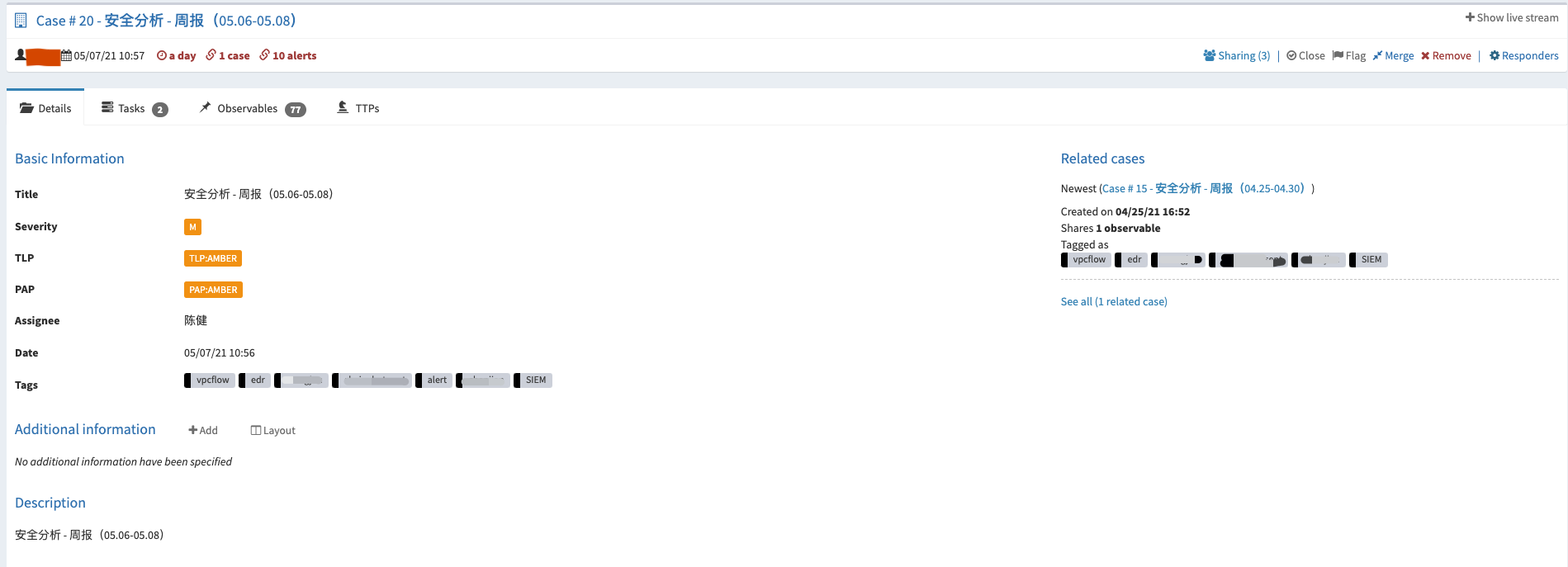

Weekly analysis report template

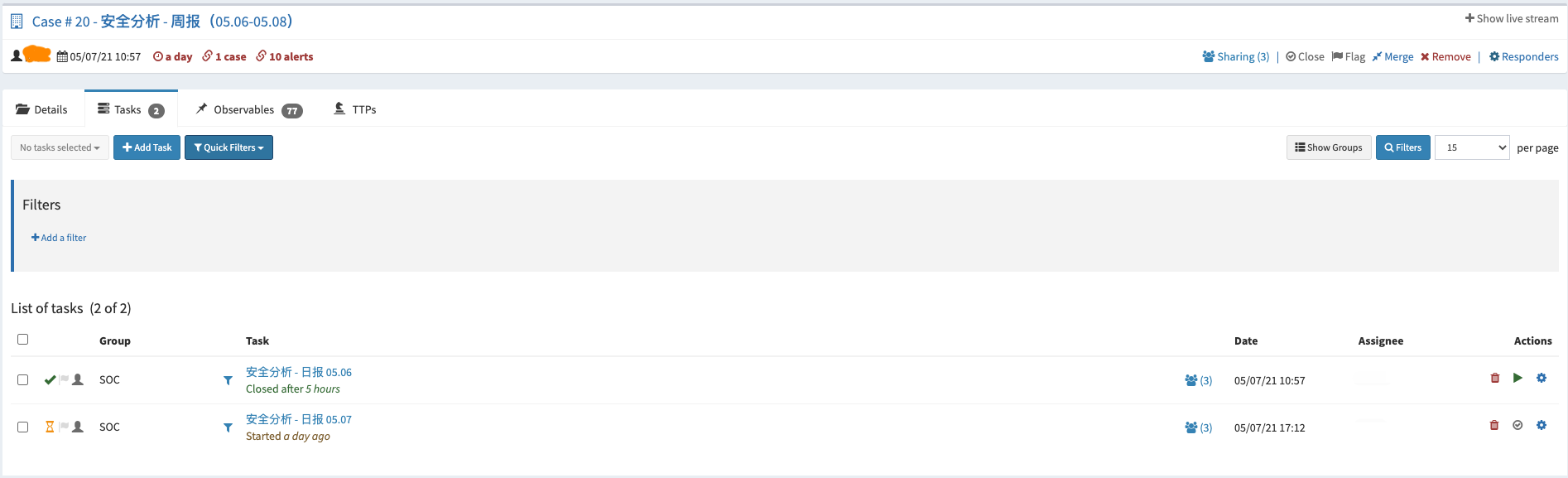

Create a Case per week and a Task per day.
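The weekly Case / daily Task layout can be sketched as a plain data structure. This is only an illustration: the dictionary keys (`title`, `tasks`) and the five-working-day assumption are mine, not TheHive's template schema.

```python
from datetime import date, timedelta


def weekly_case(monday: date, working_days: int = 5) -> dict:
    """Build a hypothetical weekly case with one task per working day."""
    days = [monday + timedelta(days=i) for i in range(working_days)]
    return {
        # One case covers the whole week.
        "title": f"Weekly analysis report {days[0].isoformat()} to {days[-1].isoformat()}",
        # One task per day, titled with the ISO date.
        "tasks": [{"title": d.isoformat()} for d in days],
    }


case = weekly_case(date(2021, 1, 4))
```

A structure like this could then be mapped onto whatever fields the case template actually exposes.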

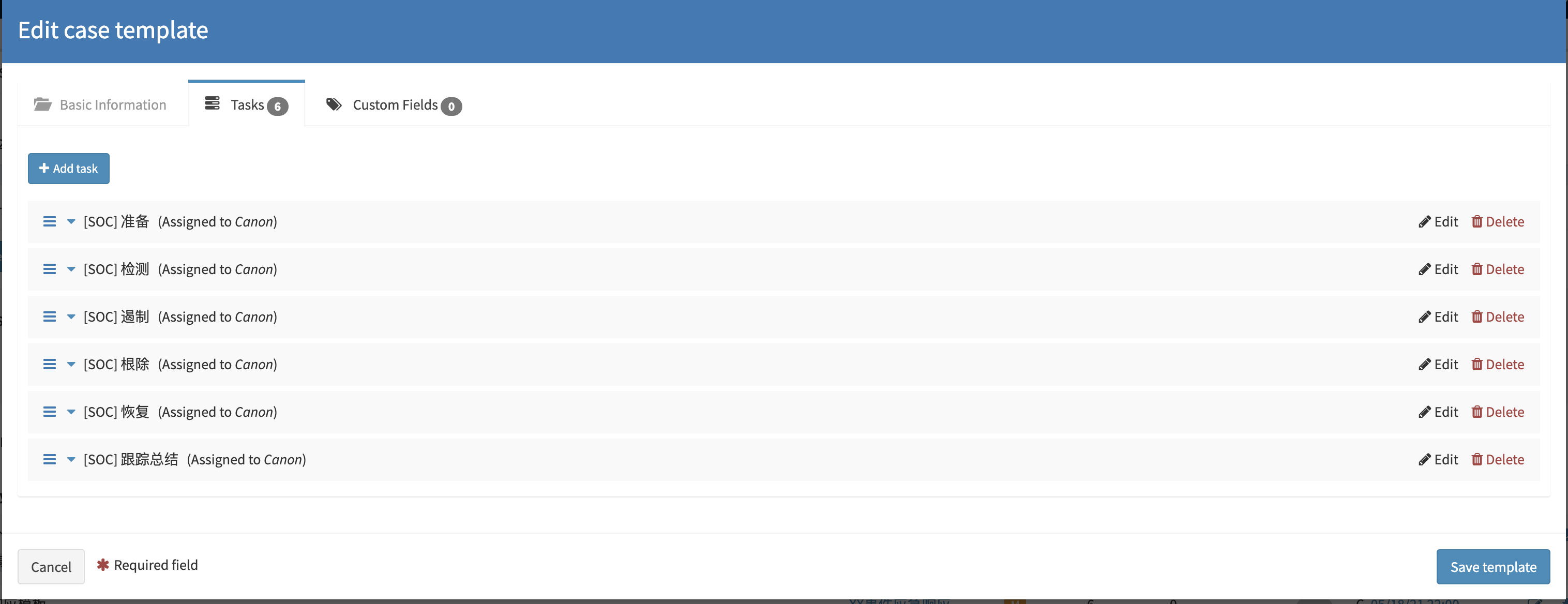

Incident response template

These can be modeled on the incident response phases.

Using a template

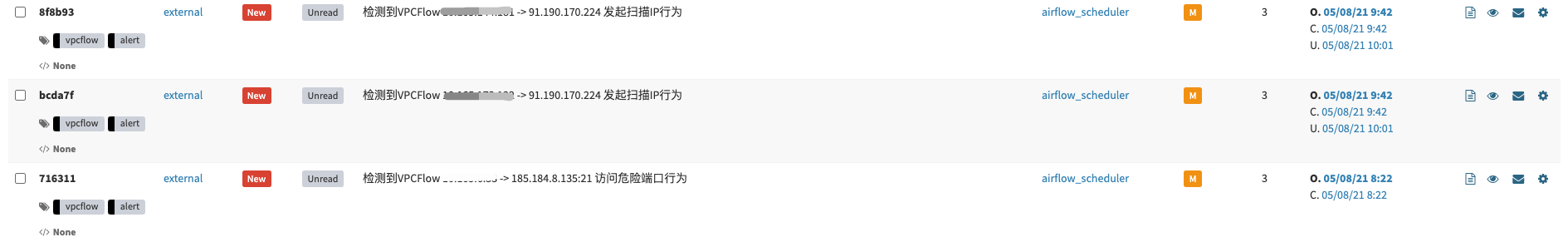

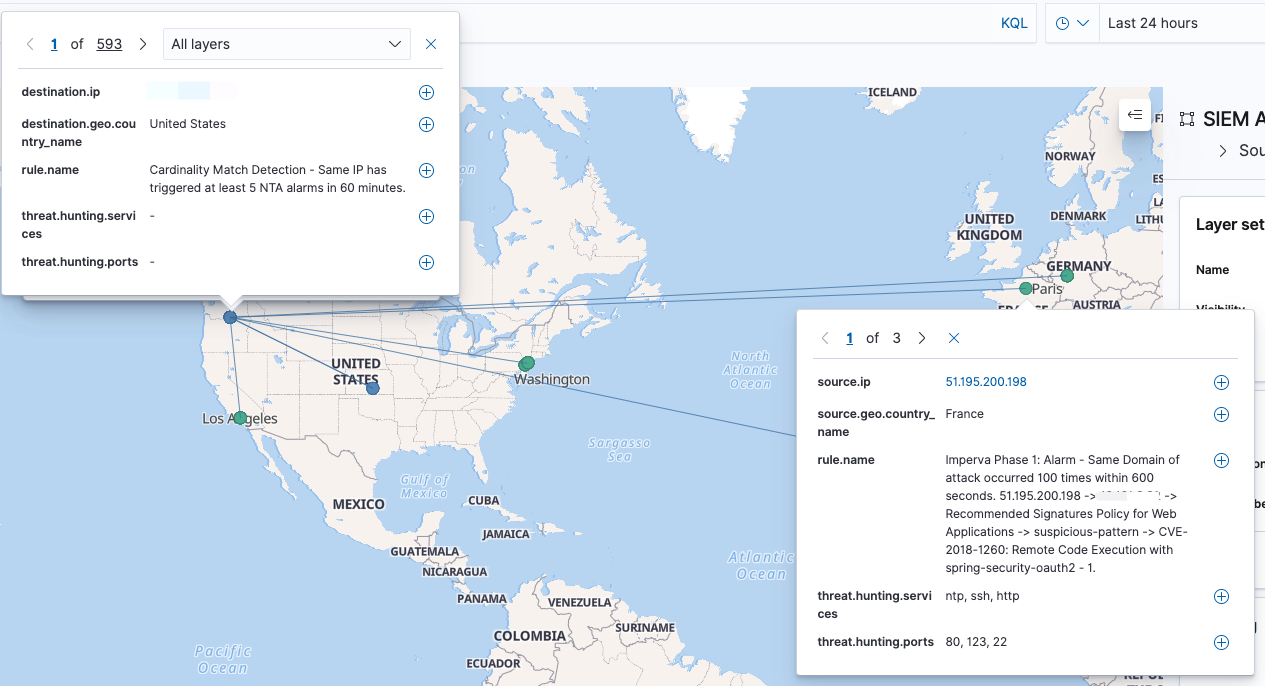

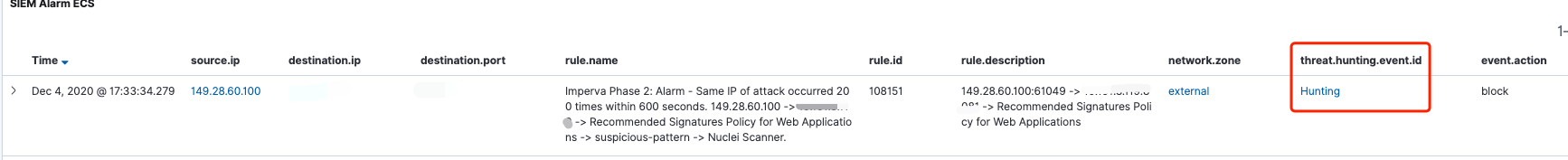

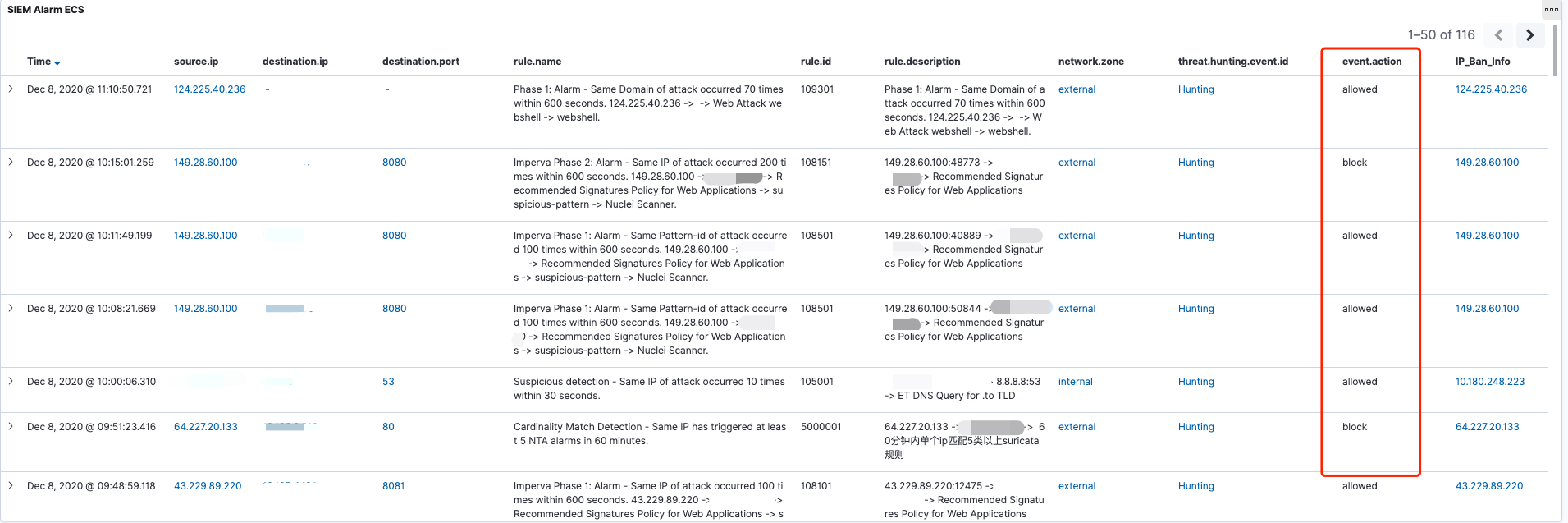

Event operations: SIEM (Alarm) -> TheHive (Alert)

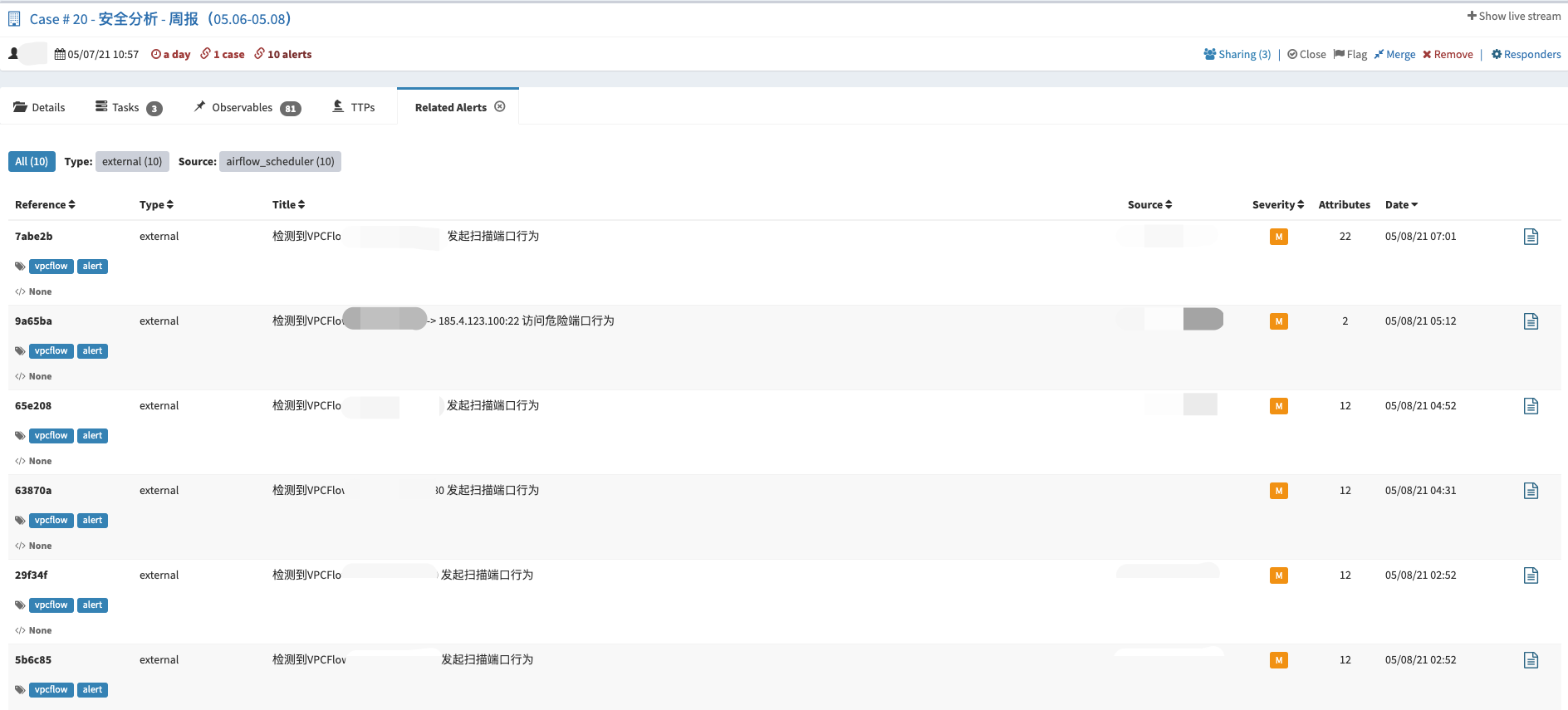

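TheHive is integrated with the SIEM, which automatically pushes two types of alarms to TheHive.

Type 1: security events that need triage. Examples: NetFlow alarms for internal-to-external traffic (abnormal port access, periodic requests, and so on) and sensitive information leak alarms (hacker forum monitoring, GitHub monitoring). These events usually need a second confirmation, so we use TheHive to record the entire handling process.

Type 2: security events that need priority attention. Examples: EDR alarms and intelligence alarms that hit C2 indicators. These events usually need an immediate response.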
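As a rough illustration of the two alarm types, the sketch below builds an alert payload from a SIEM alarm. The alarm keys (`category`, `rule_id`, `rule_name`, `src_ip`, `timestamp`), the category names, and the severity mapping are assumptions for this example. The payload field names follow the general shape of TheHive's alert API, but check them against your TheHive version before posting anything.

```python
import hashlib

# Assumed categories that need a second look before anyone responds.
TRIAGE_CATEGORIES = {"netflow", "leak"}


def build_alert(alarm: dict) -> dict:
    """Map a (hypothetical) SIEM alarm onto an alert payload."""
    # A stable reference lets the receiver de-duplicate repeated pushes.
    raw = f"{alarm['rule_id']}|{alarm['src_ip']}|{alarm['timestamp']}"
    source_ref = hashlib.sha1(raw.encode()).hexdigest()[:12]
    needs_triage = alarm["category"] in TRIAGE_CATEGORIES
    return {
        "title": f"[{alarm['category']}] {alarm['rule_name']}",
        "description": alarm.get("description", ""),
        "type": "triage" if needs_triage else "priority",
        "source": "siem",
        "sourceRef": source_ref,
        "severity": 2 if needs_triage else 3,
        "tags": [alarm["category"], f"rule:{alarm['rule_id']}"],
        "artifacts": [{"dataType": "ip", "data": alarm["src_ip"]}],
    }


edr_alert = build_alert({
    "category": "edr", "rule_id": 1001, "rule_name": "C2 indicator hit",
    "src_ip": "10.0.0.8", "timestamp": "2021-01-04T08:00:00Z",
})
```

Sending the payload (for example with an HTTP POST and an API key) is left out on purpose, since it depends on the deployment.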

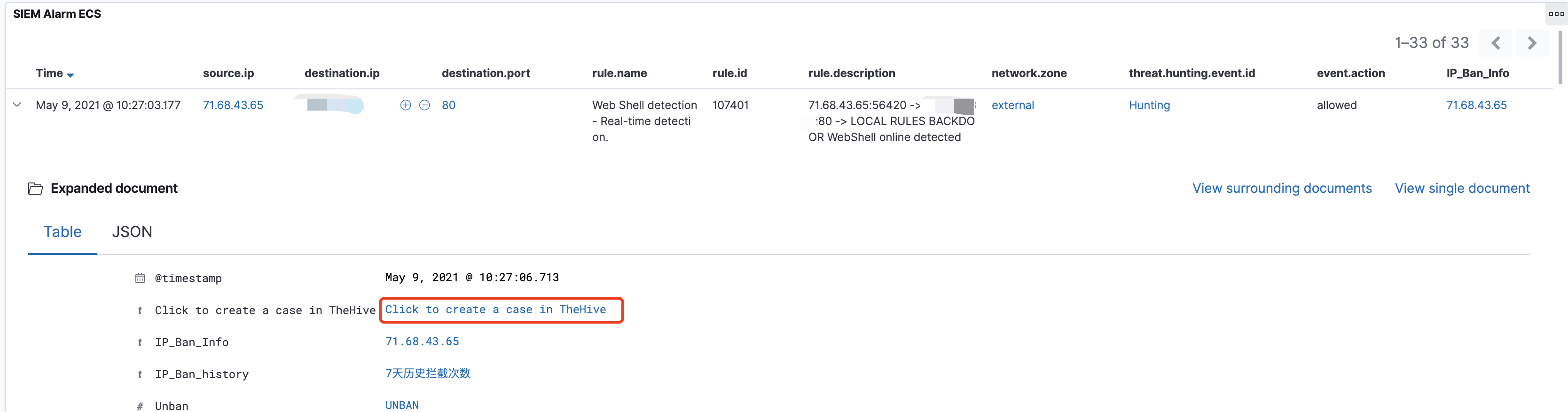

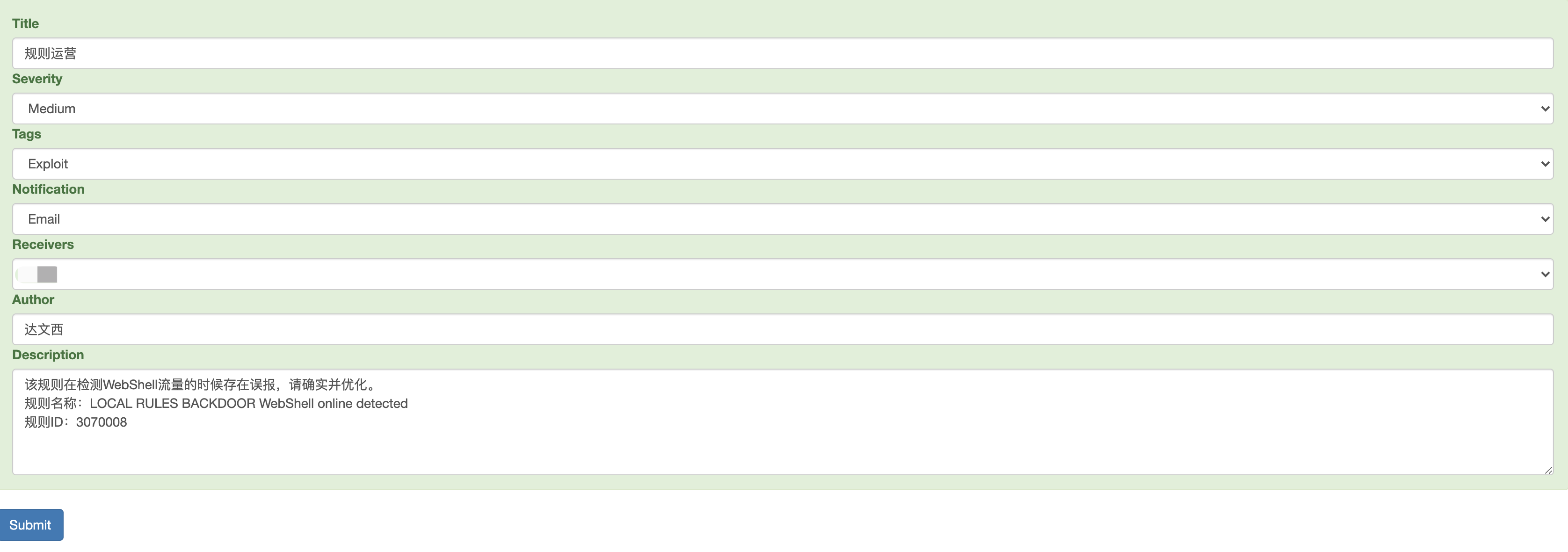

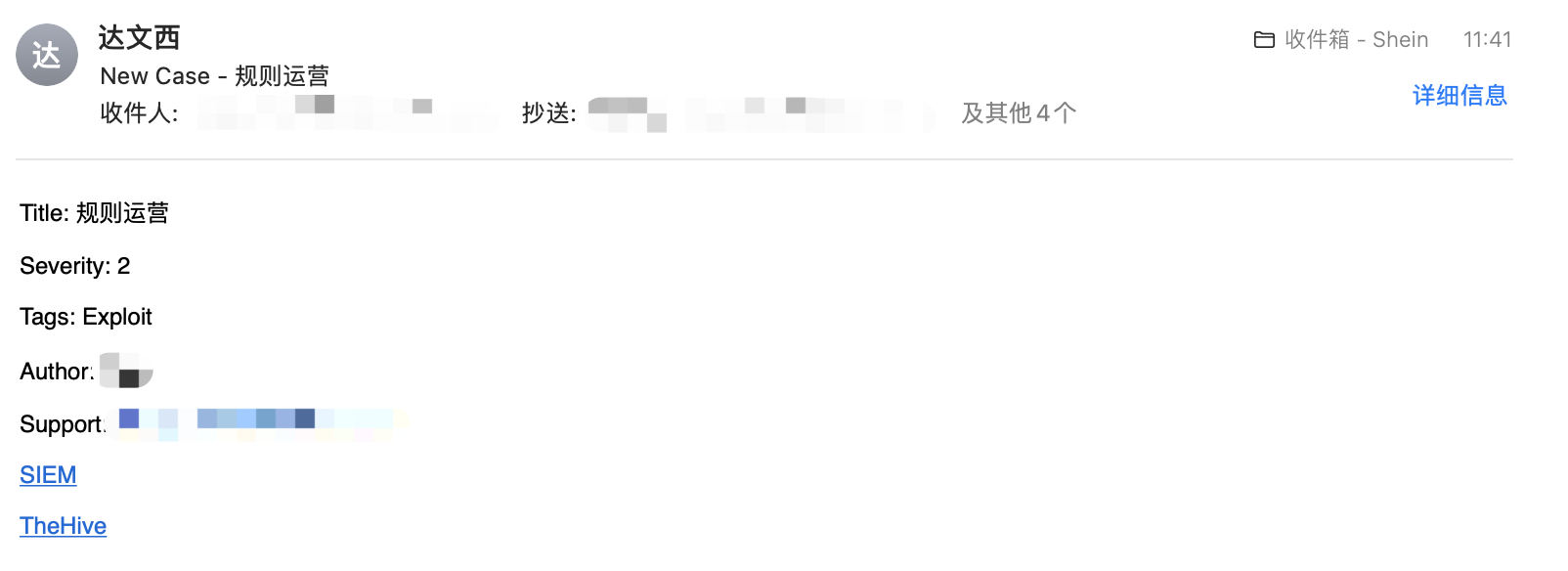

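Rule operations: SIEM (Alarm, Alert) -> TheHive (Case)

False positives and false negatives found during analysis are sent to TheHive by manually submitting a Case. For example, when an alarm on the SIEM turns out to be a false positive, the alarm is submitted from the SIEM to a designated owner, and the system automatically assigns the email and the Case to that person.

Routine items:

Weekly security analysis report

Closing thoughts:

If you follow open-source solutions, you have probably seen TheHive combined with workflow components such as Shuffle or n8n. TheHive's strength is incident response and analysis, which is a semi-automated approach. Once it is wired to a workflow component, what you get is effectively a "DIY" SOAR. Compared with open-source SOAR, commercial SOAR adds the concept of a "war room", and that feature is somewhat similar to TheHive. For example, in a war room you can analyze intelligence on an IP, or drive existing security devices to respond to an IoC. These capabilities correspond to Analyzers and Responders in TheHive.

Personally, I think TheHive's "semi-automated" approach complements SOAR well, and I expect more value to emerge once the two are integrated. For example, an analysis task could selectively invoke SOAR Playbooks depending on the scenario and feed the response results back into TheHive. There is a lot more worth saying about TheHive than fits in one post. The rest has to be discovered in real scenarios; the approach matters most!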

1 | $ echo 'deb http://download.opensuse.org/repositories/security:/zeek/Debian_10/ /' | sudo tee /etc/apt/sources.list.d/security:zeek.list |

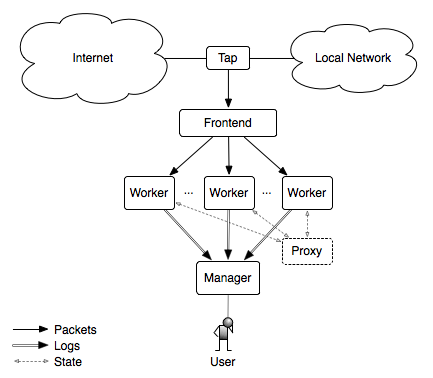

Manager -> Worker

1 | # Zeek installation skipped |

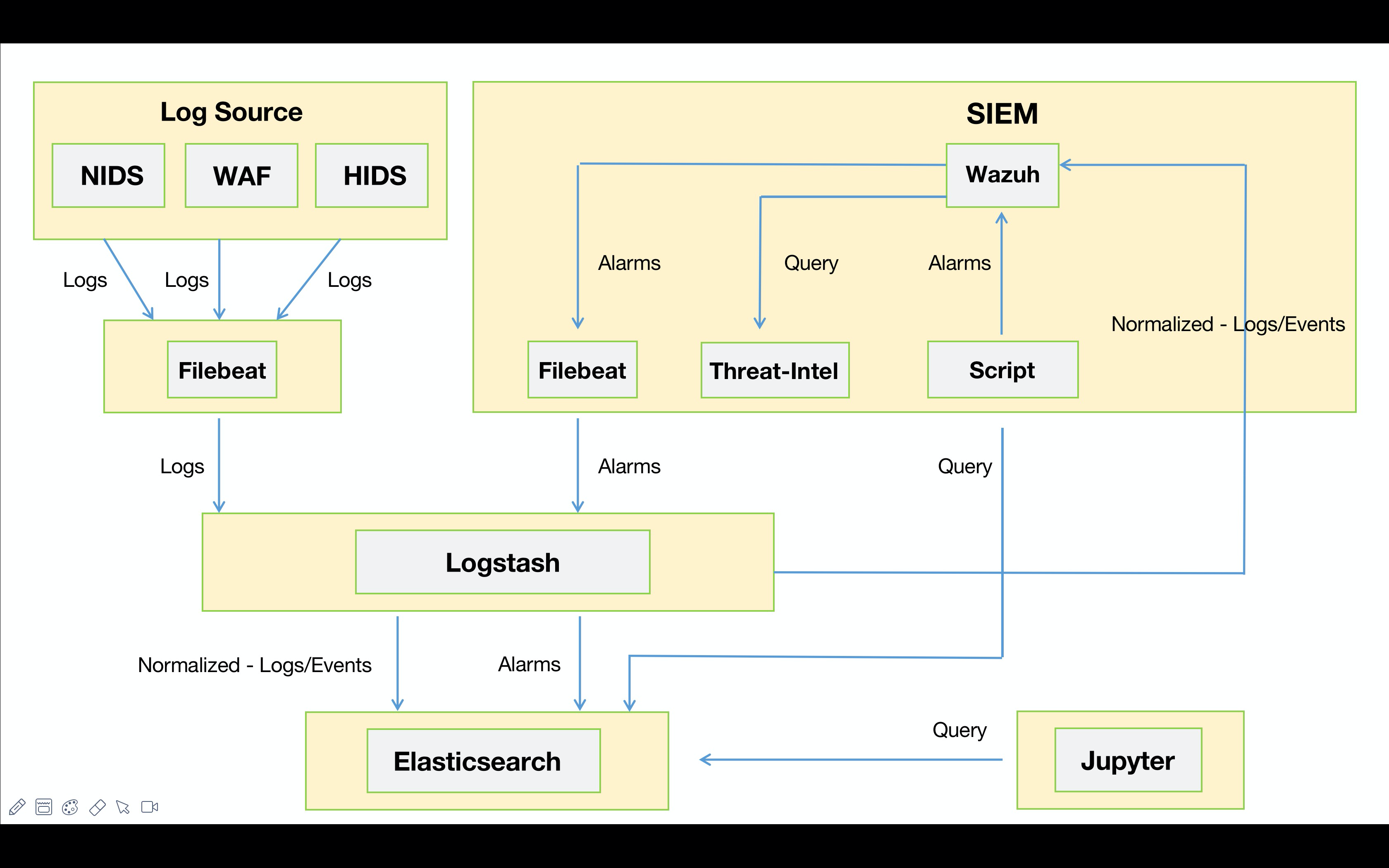

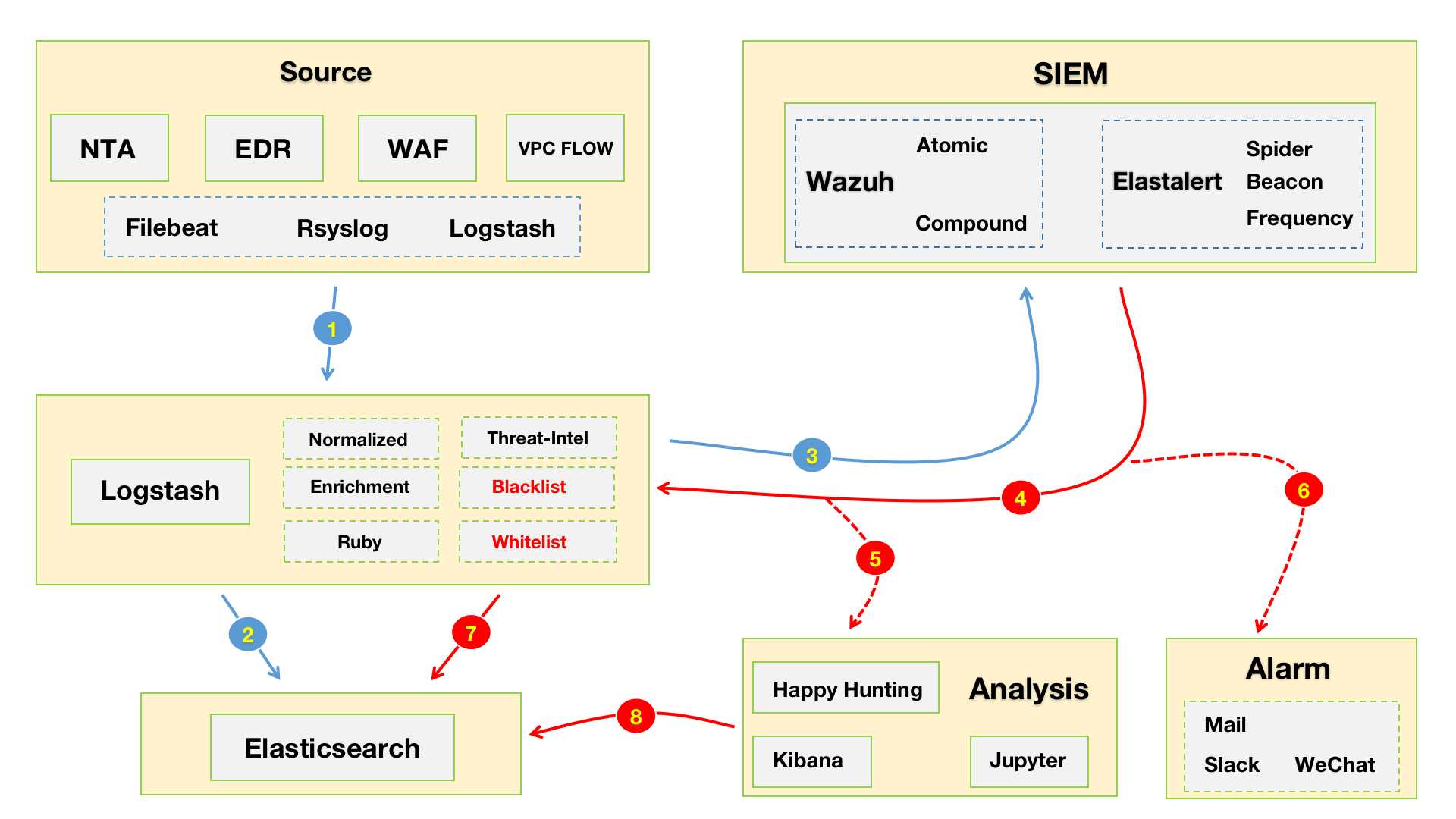

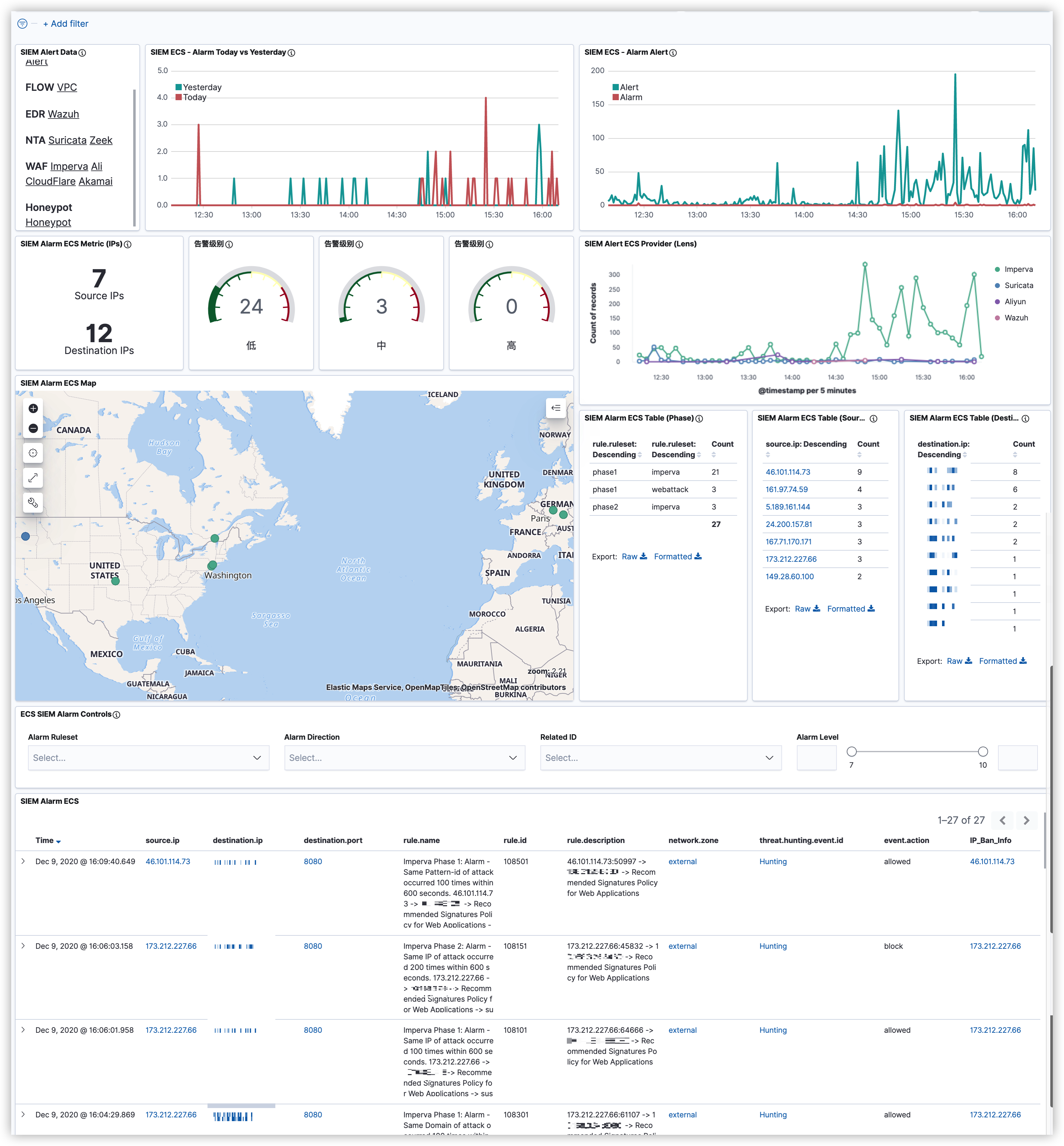

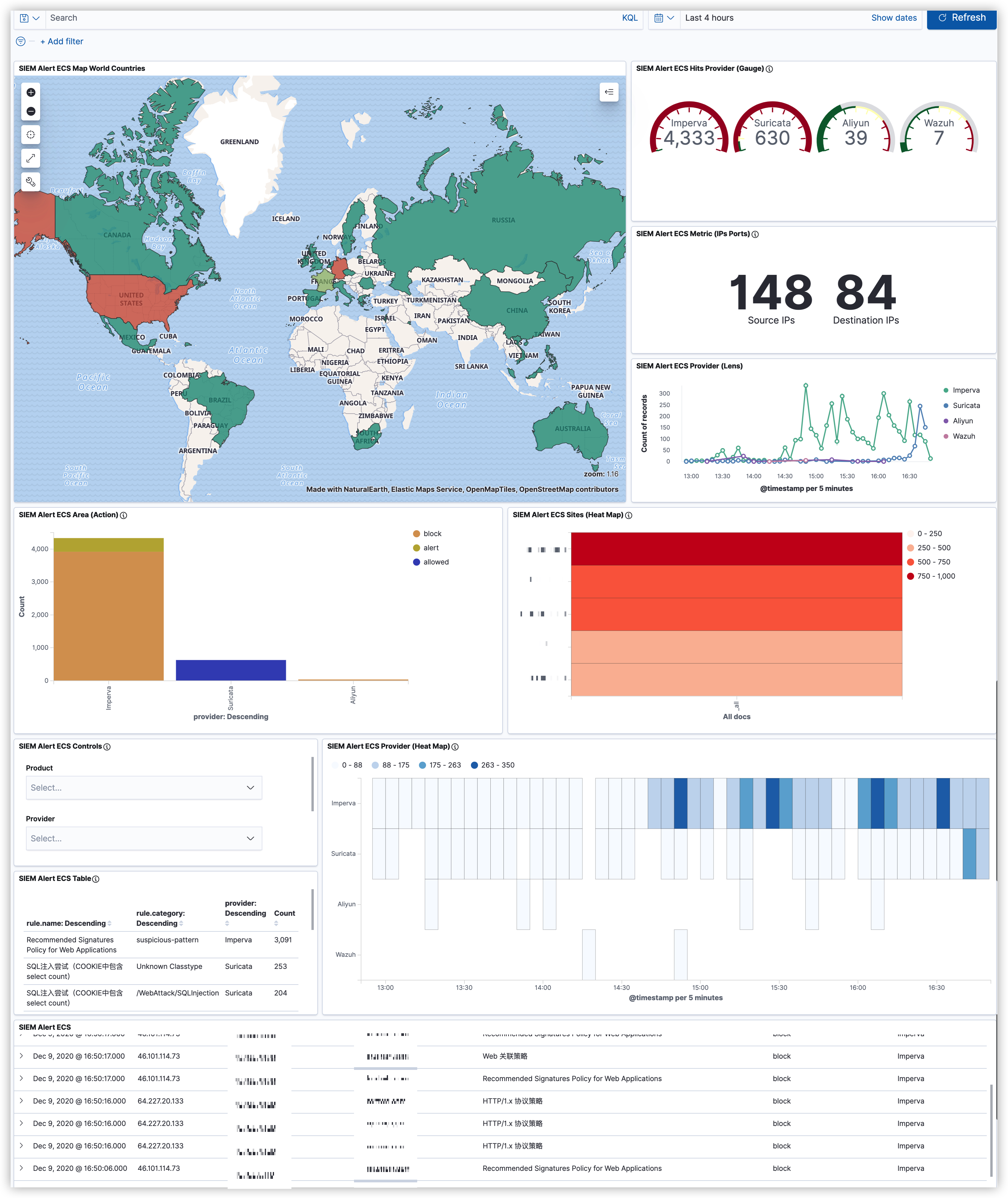

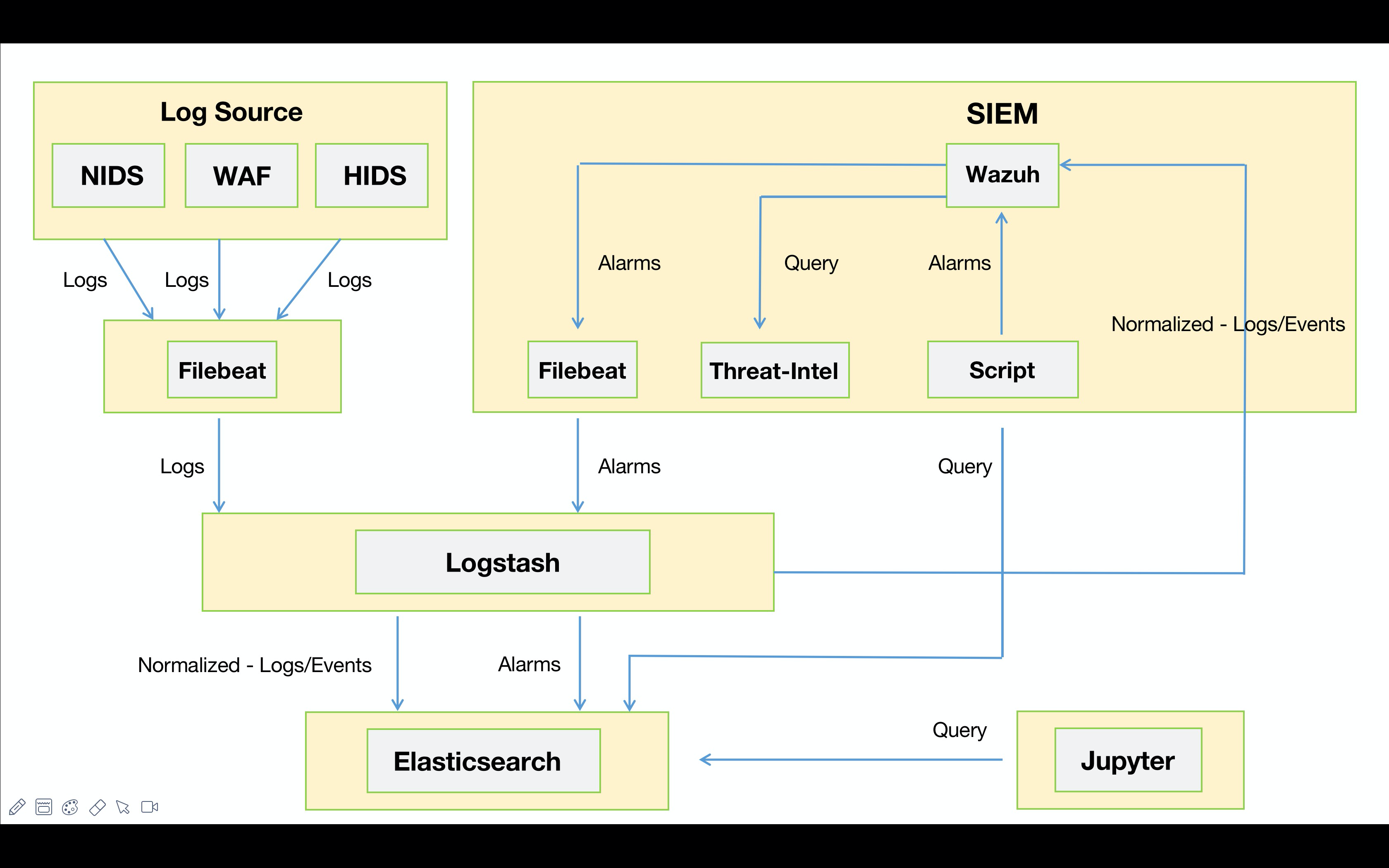

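Work has been busy and I haven't posted for a while. With the year almost over, consider this a summary. This year my effort mostly went into security operations, and as the work progressed I found that my self-assembled SIEM was not very "comfortable" to use. I previously wrote a post, "Wazuh: How to Correlate Alerts Across Heterogeneous Data"; the data-flow workflow planned at that time is shown in the figure below.

The "DIY" SIEM is built mainly on the ELK stack, with Wazuh and Elastalert as the alerting modules. Early on, to let Wazuh consume heterogeneous data (WAF, NTA, EDR) and correlate alerts across it, I used Logstash to standardize the data before indexing, and Wazuh then correlated the standardized data. For example, Suricata sends alert events through Filebeat; Logstash standardizes them and outputs to both Elastic and Wazuh. Elastic receives the alert metadata, while Wazuh receives the standardized security events.

Because the early standardization did not use **ECS** (Elastic Common Schema), continuing with the Elastic ecosystem later became inconvenient. Elasticsearch introduced its SIEM feature in the 7.x releases, and if you want to use Elastic SIEM for analysis, ECS is the first choice. To integrate better with the open-source ecosystem going forward, the original standardization needs to be converted to the ECS format.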

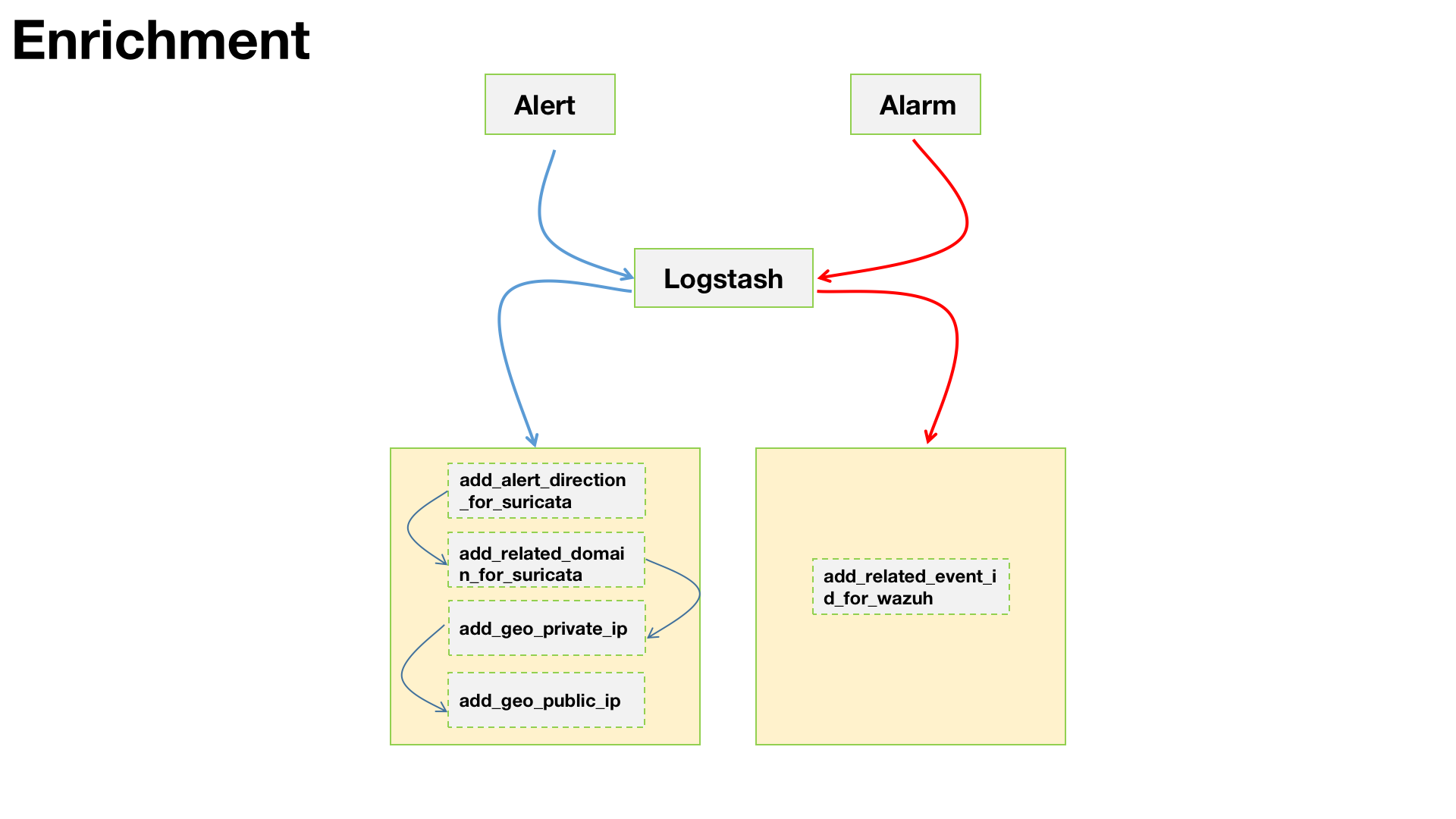

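To improve alert quality and the efficiency of security analysis, the indexed security events and the generated alerts need to be enriched.

Enrich internal assets with data from the CMDB platform, for example by adding fields such as department, business line, application type, and owner.

Integrate threat intelligence data: the SIEM enriches the attacking IP of each alert with intelligence data;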

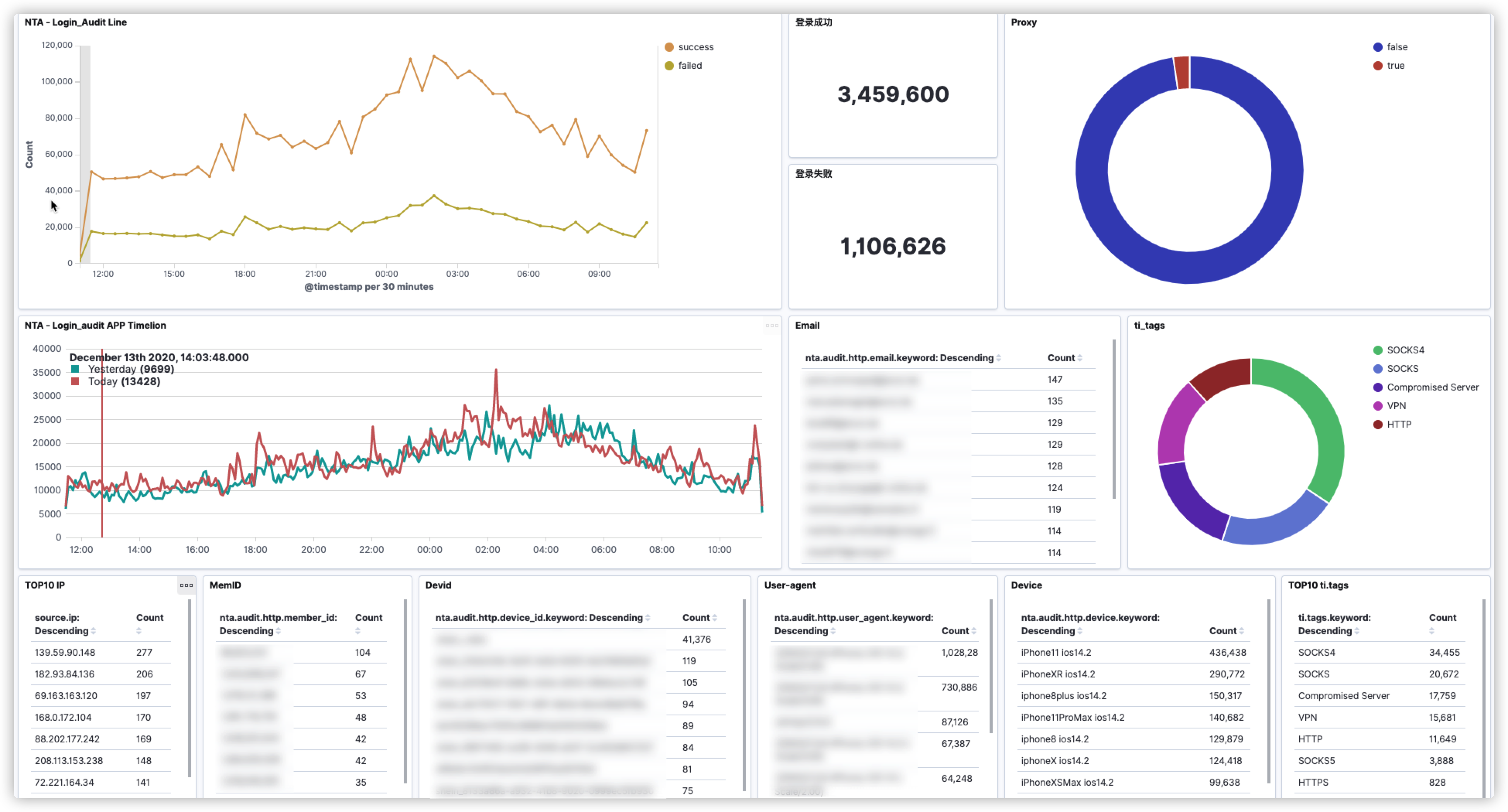

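Sensitive interface monitoring (e.g. login, payment, and wallet interfaces). Use third-party data to tag IP addresses as proxies, providing supporting data for later risk-control decisions;

Add direction and zone fields to security events, so analysts can immediately tell internal-to-internal from internal-to-external alerts. Alert severity weights can also be adjusted by direction;

The detection capability of the underlying security devices and the credibility of their security events are the key factors that directly affect SIEM alerts.

Alerts currently generated by the SIEM do not carry the original security events, which is unfriendly to analysts, especially when alert volume is high.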

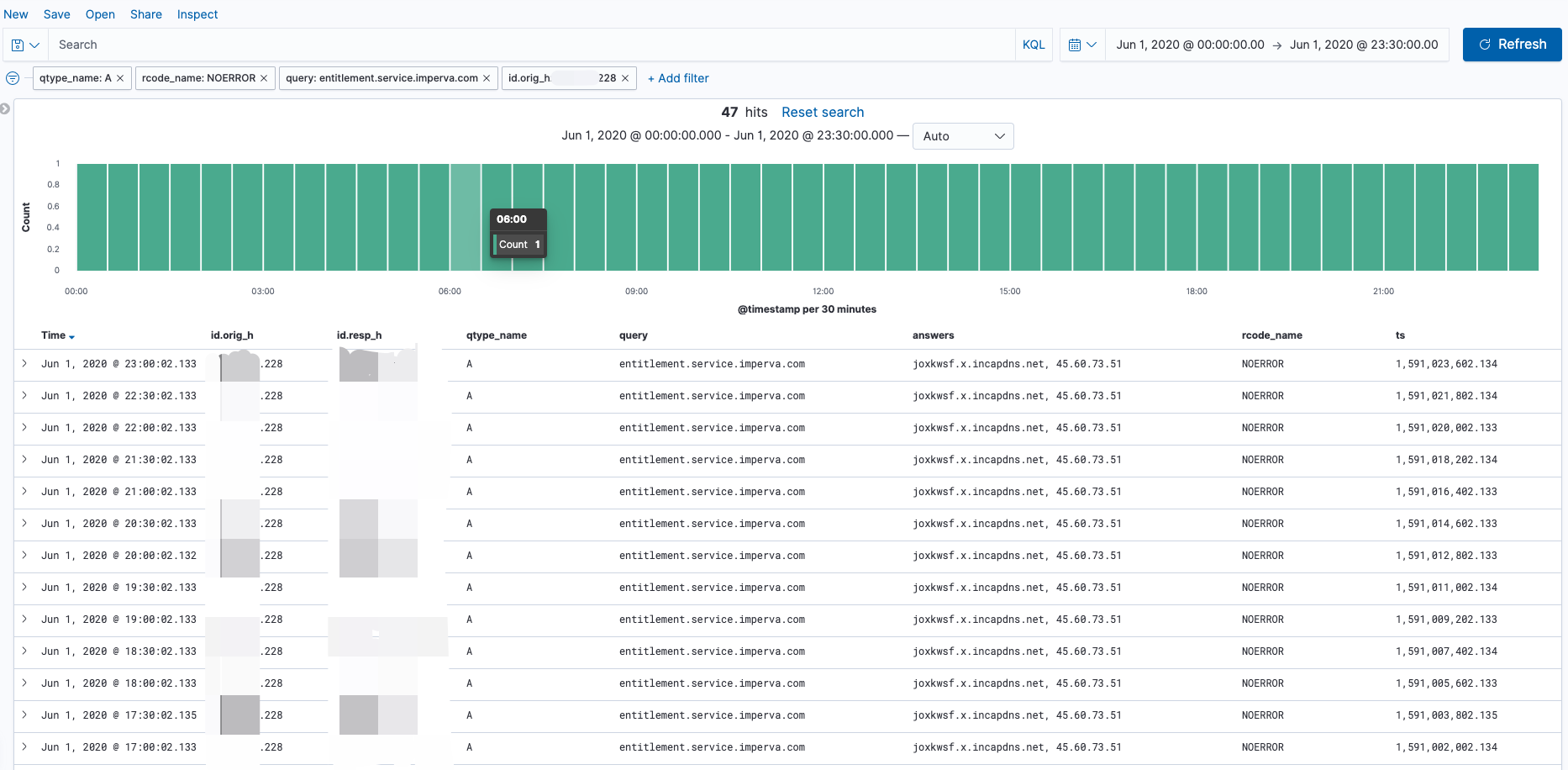

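Solved the problem of excessive response time (15-20 minutes 😅) when the SIEM calls the CDN WAF API. Integration with the Imperva WAF API is now near real-time.

Optimized the NTA login_audit code to improve NTA performance. I previously wrote a post, "**Suricata + Lua: Integrating Local Intelligence**", which used a Lua script to audit logins on "sensitive" interfaces and detect high-risk accounts using intelligence. This functionality has now been ported to Logstash + Ruby;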

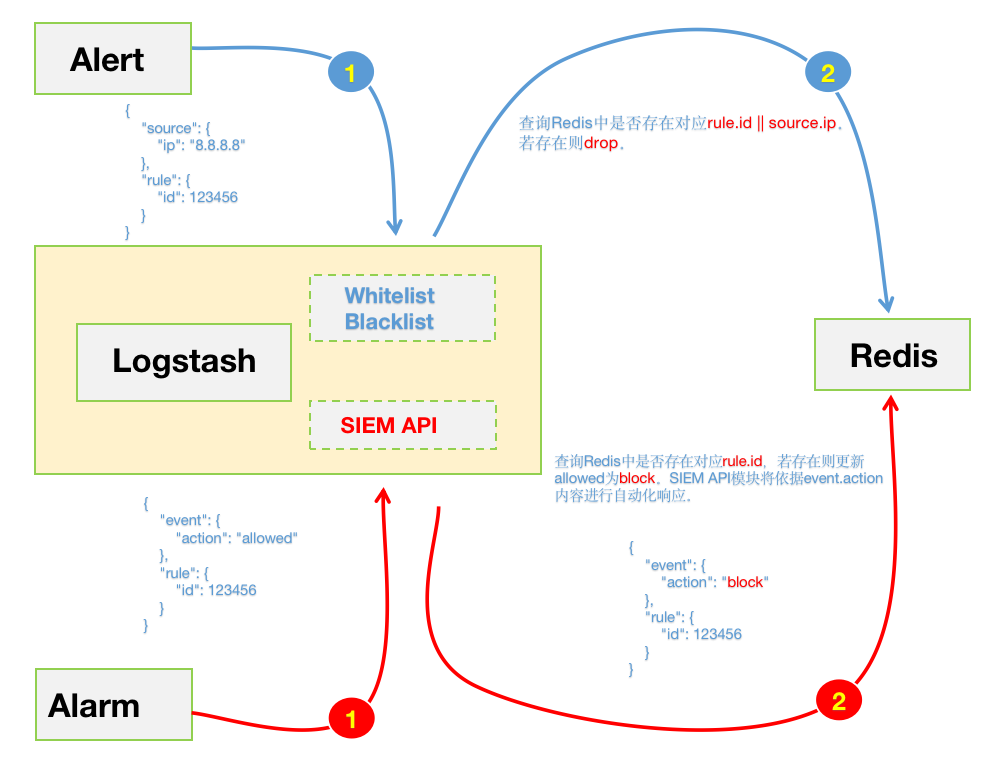

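Previously, the automated response rules were implemented by manually editing scripts to adjust rule.id. That barely worked early on, but its shortcomings showed over time: it was hard to manage and raised maintenance costs. To maintain the SIEM rules, Redis is now used for unified rule management. From now on, a rule.id that should be blocked only needs to be pushed to Redis. The event.action field in the output alert also marks how the alert was responded to;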
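A minimal sketch of this rule management idea, with an in-memory set standing in for Redis so the example runs on its own. The `RuleStore` class, the nested `rule.id` / `event.action` layout, and the default action `"alert"` are assumptions for illustration, not the actual Logstash/Ruby implementation.

```python
class RuleStore:
    """Stand-in for a Redis set holding rule IDs that should be blocked."""

    def __init__(self):
        self._blocked = set()

    def push(self, rule_id) -> None:
        # With Redis this would be a set-add on a shared key.
        self._blocked.add(str(rule_id))

    def is_blocked(self, rule_id) -> bool:
        return str(rule_id) in self._blocked


def tag_action(event: dict, store: RuleStore) -> dict:
    """Return a copy of the event with event.action set from the rule store."""
    rule_id = event.get("rule", {}).get("id")
    blocked = rule_id is not None and store.is_blocked(rule_id)
    tagged = dict(event)
    tagged["event"] = dict(event.get("event", {}), action="block" if blocked else "alert")
    return tagged


store = RuleStore()
store.push(2010935)
tagged = tag_action({"rule": {"id": 2010935}}, store)
```

The point of the design is that adding or removing a blocked rule becomes a data change in the store rather than a script edit.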

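This is the workflow of the readjusted "DIY" SIEM v0.2 😁. As the figure below shows, I won't go into the data collection side; those are all off-the-shelf tools that simply ship the data. The following focuses on the data processing in Logstash: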

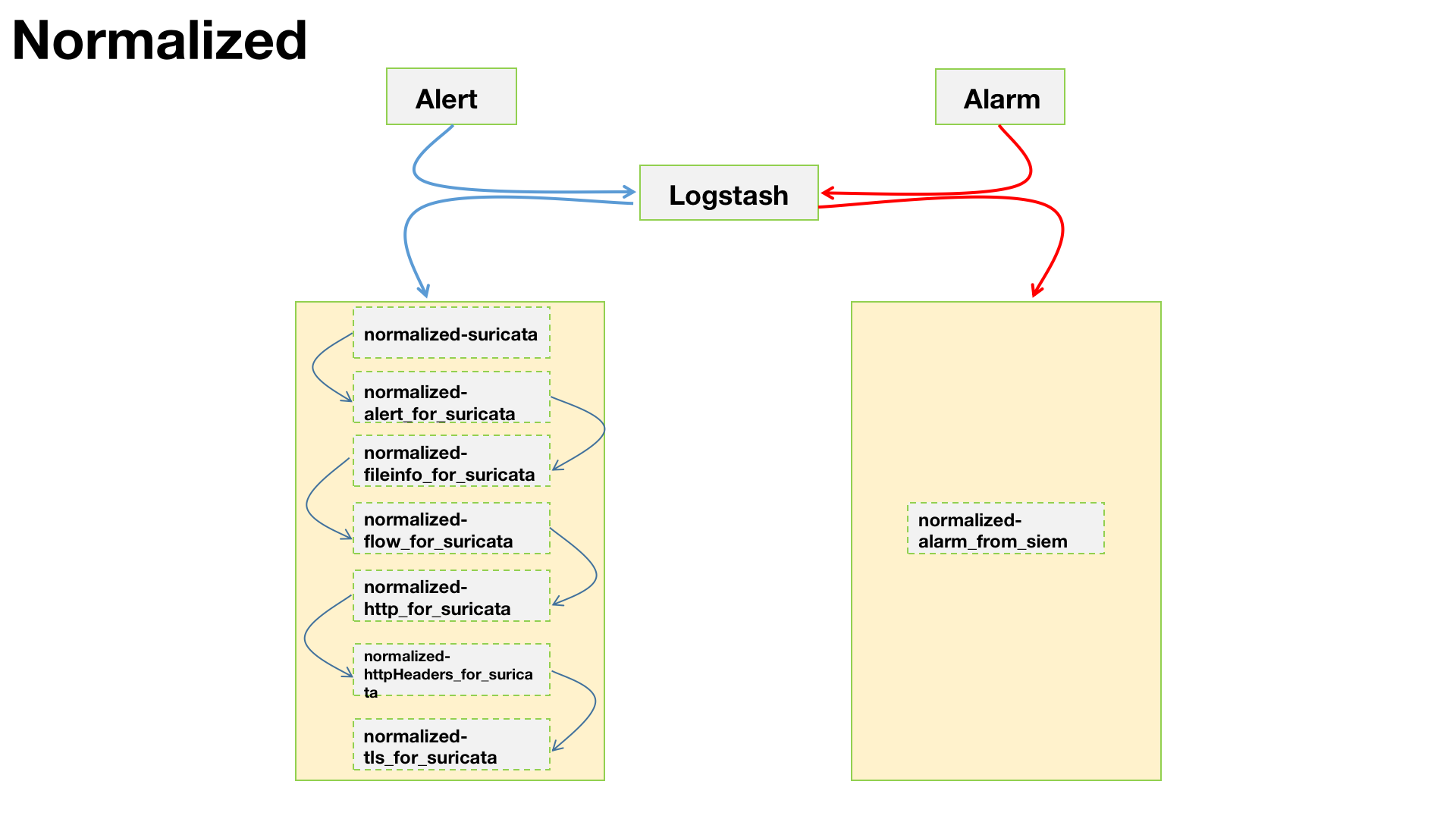

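Standardize alert events, that is, the data emitted by security devices. See the blue line in the figure above.

Elastic officially supports ECS for Suricata data, so we can simply enable the Suricata module in filebeat. One caveat: by default, some of the module's standardization is done on the Elastic side. Because we need Logstash for the downstream ETL, the overall data flow is now Filebeat -> Logstash -> Elastic, so that part of the standardization has to be implemented in Logstash. The relevant configuration is as follows:

General Suricata event standardization configuration.

Fields such as provider, product, and sensor, used later to distinguish data source types (e.g. NTA, WAF, EDR) and different NTA products (e.g. Suricata, Zeek)

1 | filter { |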

1 | filter { |

1 | filter { |

1 | filter { |

1 | filter { |

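When Suricata is set to dump-all-headers: both, it outputs all HTTP headers. That is a great feature for the http_audit requirement, but the output format is a bit awkward 😂😂😂. To make filtering in Kibana easier, I standardized this data. 😁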

1 | filter { |

1 | def filter(event) |

1 | { |

1 | { |
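To illustrate the kind of header standardization described above, here is a sketch in Python (the original uses a Logstash Ruby script). It assumes the headers arrive as a list of `{"name": ..., "value": ...}` objects and flattens them into one dictionary; the lower-case, underscore-separated key style is my own choice.

```python
def flatten_headers(headers: list) -> dict:
    """Turn [{"name": "User-Agent", "value": "curl"}, ...] into a flat dict."""
    flat = {}
    for item in headers:
        key = item["name"].lower().replace("-", "_")
        value = item["value"]
        if key in flat:
            # Repeated headers are collected into a list instead of overwritten.
            existing = flat[key]
            flat[key] = existing + [value] if isinstance(existing, list) else [existing, value]
        else:
            flat[key] = value
    return flat


flat = flatten_headers([
    {"name": "User-Agent", "value": "curl"},
    {"name": "Cookie", "value": "a=1"},
    {"name": "Cookie", "value": "b=2"},
])
```

With one field per header, a Kibana filter can target a single header directly instead of searching inside a list of name/value pairs.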

1 | filter { |

Standardize alarm events, that is, the data emitted by the SIEM. See the red line in the figure above.

General alarm event standardization configuration.

1 | filter { |

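Enrich alert events, that is, the raw security events emitted by security devices. See the blue line in the figure above.

For special reasons, I had to set EXTERNAL_NET = any in suricata.yaml. This causes some false positives among Suricata alerts, since the rule direction is no longer available as a narrowing condition. So I use Logstash to add a filtering layer before the data reaches the SIEM.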

1 | filter { |

1 | require "ipaddr" |
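The direction check behind this filtering layer can be sketched with Python's `ipaddress` module. The internal network ranges and the four direction labels below are assumptions for illustration; the real ranges come from your own environment.

```python
import ipaddress

# Assumed internal ranges (RFC 1918); replace with the real network plan.
INTERNAL_NETS = [
    ipaddress.ip_network(n)
    for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_internal(ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in INTERNAL_NETS)


def direction(src_ip: str, dst_ip: str) -> str:
    """Classify a flow by whether each endpoint is internal."""
    src, dst = is_internal(src_ip), is_internal(dst_ip)
    if src and dst:
        return "internal"
    if src:
        return "outbound"
    if dst:
        return "inbound"
    return "external"
```

An alert whose signature only makes sense for inbound traffic could then be dropped or down-weighted when `direction()` returns something else.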

To ease later correlation analysis, a field associating the attacker with the domain was added.

1 | filter { |

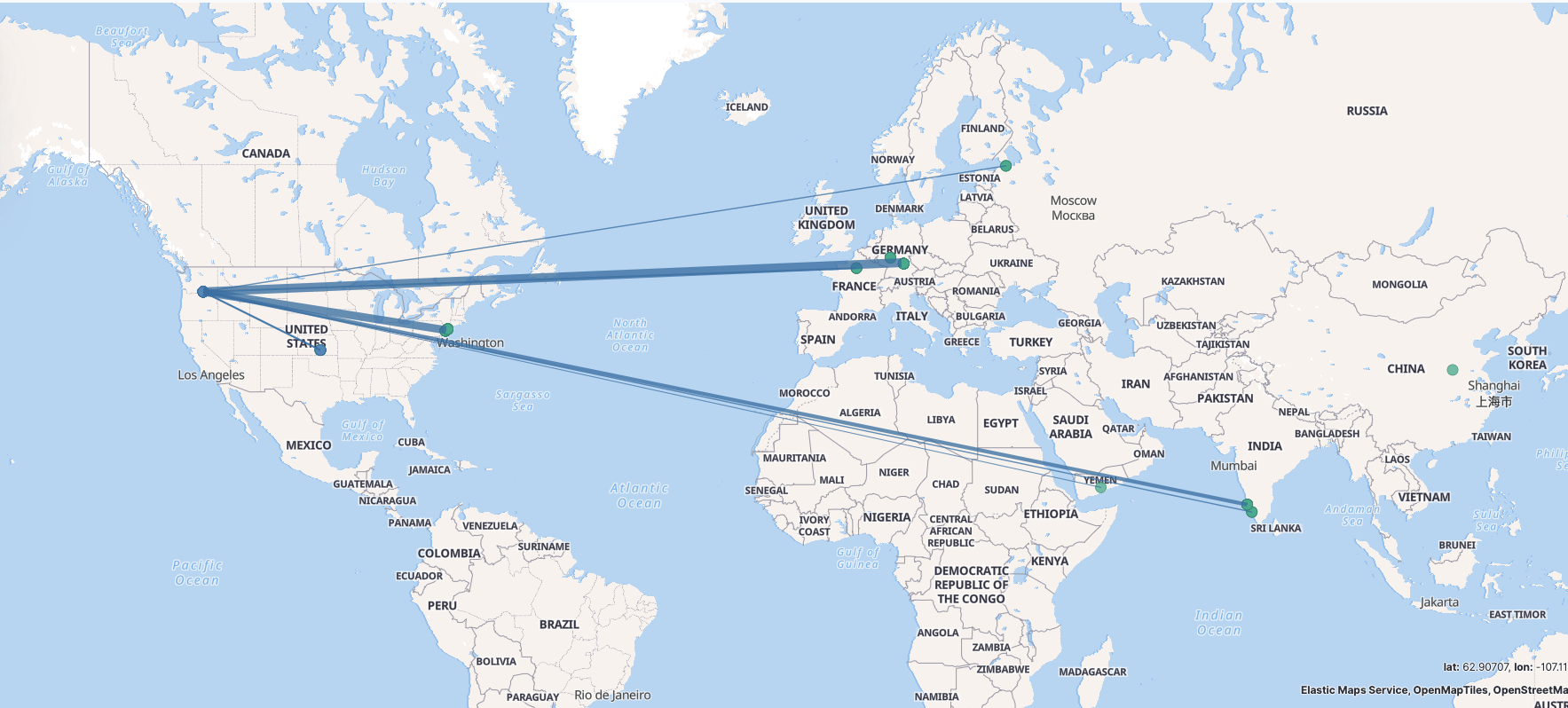

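Add geolocation markers for internal IPs, mainly so that assets show up at coordinates on the map in the Dashboard. Pew pew pew? 😂 Because these are not public IPs, GeoIP cannot match them, so the Translate plugin is used here instead.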

1 | filter { |

1 | '192.168.199.\d+': '{"location":{"lat":45.8491,"lon":-119.7143},"country_name":"China","country_iso_code":"CN","region_name":"Jiangsu","region_iso_code":"JS","city_name":"Nanjing"}' |

1 | '192.168.199.\d+': '{"number":4134,"organization.name":"CHINANET-BACKBONE"}' |
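The regex-keyed dictionary lookup can be approximated as follows. This is a sketch of the idea, not the Translate plugin itself: the `lookup` helper is hypothetical, the dictionary is trimmed to two fields, and the dots in the pattern are escaped here, whereas the dictionary entry above leaves them unescaped.

```python
import json
import re

# Pattern -> JSON payload, mirroring the dictionary entries shown above.
GEO_DICT = {
    r"192\.168\.199\.\d+": '{"country_iso_code":"CN","city_name":"Nanjing"}',
}


def lookup(ip: str, dictionary: dict):
    """Return the decoded payload of the first pattern matching the whole IP."""
    for pattern, payload in dictionary.items():
        if re.fullmatch(pattern, ip):
            return json.loads(payload)
    return None


geo = lookup("192.168.199.10", GEO_DICT)
```

Matching the whole string (rather than a substring) avoids accidental hits such as `1192.168.199.10`.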

Public IPs are easy: just load the GeoIP data.

1 | filter { |

Enrich alarm events, that is, the data emitted by the SIEM. See the red line in the figure above.

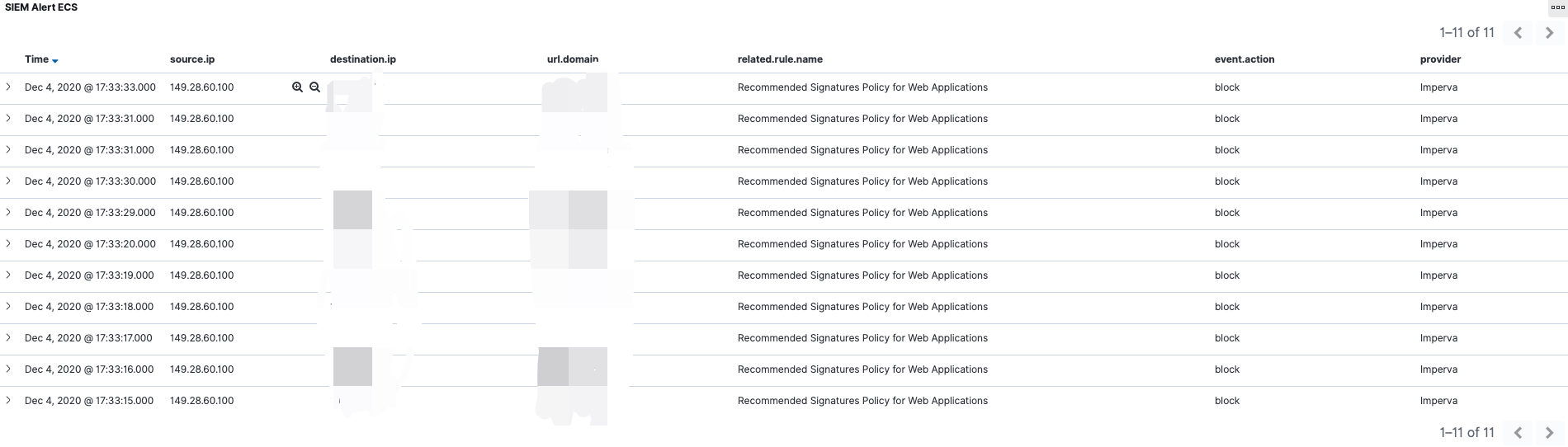

A Hunting feature was added to SIEM alarms; it traces directly back to all alert events that triggered the alarm.

1 | filter { |

1 | require "json" |

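Below is a Wazuh aggregated alarm. By clicking the threat.hunting.event.id field, analysts can trace back the underlying alert events that triggered the aggregation rule.

A Ruby script loaded by Logstash pushes IoCs to Redis. To avoid duplicate pushes, each IoC is given an expiry time (7 days by default). See the blue line in the figure above;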
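The de-duplication logic can be sketched without a Redis server by keeping expiry times in a dictionary. In Redis the same effect is typically achieved with a `SET` that uses the `NX` and `EX` options. The `IocCache` class and its injectable clock are assumptions made so the example is testable.

```python
import time


class IocCache:
    """In-memory stand-in for 'set key only if absent, with a TTL'."""

    def __init__(self, ttl_seconds: int = 7 * 24 * 3600, clock=time.time):
        self._ttl = ttl_seconds
        self._clock = clock
        self._expiry = {}

    def push_if_new(self, ioc: str) -> bool:
        """Return True if the IoC should be pushed, False if still cached."""
        now = self._clock()
        expires = self._expiry.get(ioc)
        if expires is not None and expires > now:
            return False  # seen recently, skip the duplicate push
        self._expiry[ioc] = now + self._ttl
        return True


fake_now = [0]
cache = IocCache(ttl_seconds=10, clock=lambda: fake_now[0])
first = cache.push_if_new("203.0.113.7")
second = cache.push_if_new("203.0.113.7")
fake_now[0] = 11
third = cache.push_if_new("203.0.113.7")
```

Only pushes that return `True` would go on to trigger downstream work such as an external lookup.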

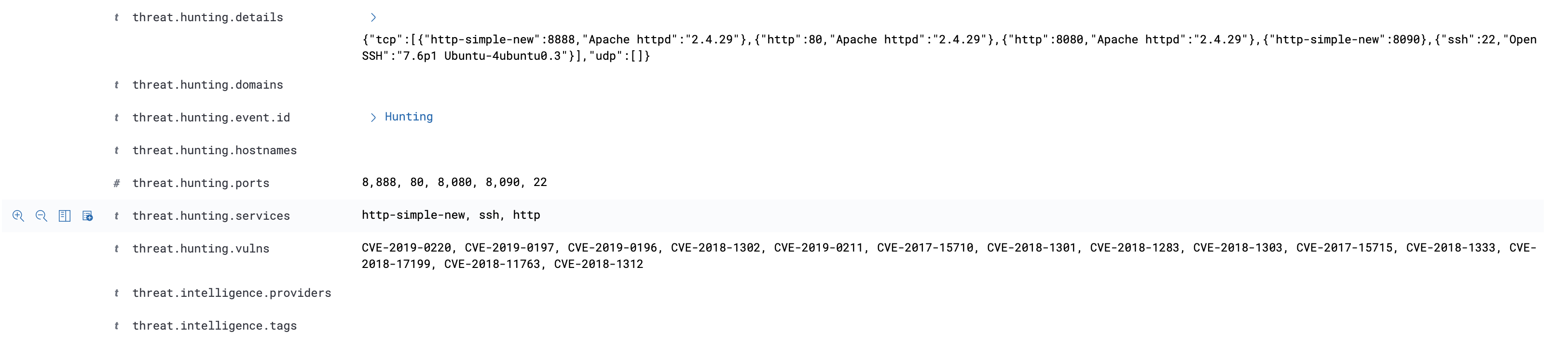

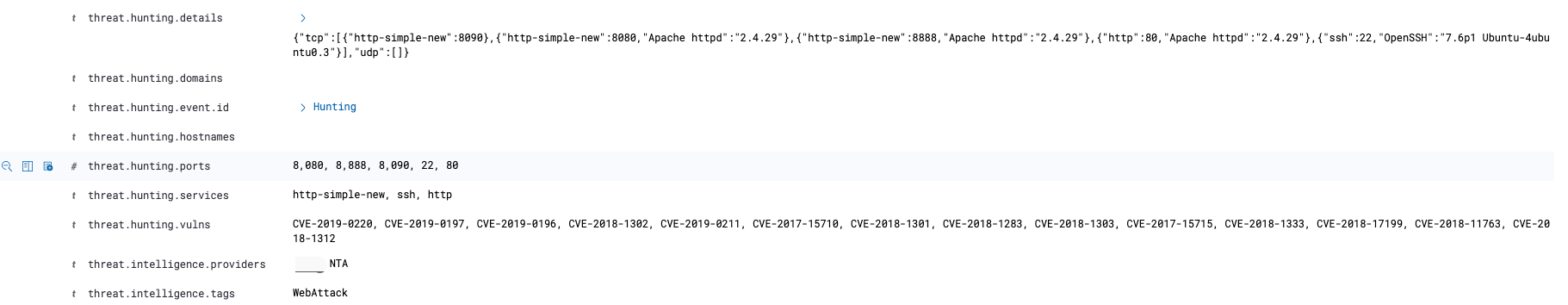

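For every IP address that has attacked us, we run a reverse probe with Shodan and collect asset information about the attacker (often a compromised host) for analysts to use later. Because the IoC has an expiry time, it is not pushed again before it expires. The API call rate per Key still needs to be controlled, though you can always use multiple Keys. You know what I mean 😈😈😈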

1 | filter { |

1 | require "json" |

Threat intelligence enrichment is done in Logstash. See the red line in the figure above;

Enrich alarms with Shodan IoC intelligence data.

1 | filter { |

1 | require "json" |

1 | { |

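This part usually integrates our own intelligence (we collect IPs that have attacked us and build internal intelligence suited to ourselves) as well as open-source intelligence. In principle the SIEM does not generate many alarm events, so the intelligence data is fetched directly from Elastic to enrich the alarms. If there are many alarm events, I recommend keeping the data in Redis or considering another approach. We plan to purchase commercial intelligence soon, which will add a commercial intelligence integration later.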

1 | filter { |

1 | require 'json' |

1 | { |

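In real-world use, some whitelisted IPs and specific signature rules need to be filtered out, to protect SIEM performance and alert reliability. This data is still sent to Elastic and retained as history, but it is not consumed by the SIEM and does not generate alarms.

Using the Clone plugin, the data to be consumed by the SIEM is passed through a filtering script.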

1 | filter { |

1 | require "redis" |

1 | filter { |

1 | require "redis" |
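The clone-then-filter routing can be summarized in a few lines. This is a sketch of the data flow only; the event keys (`srcip`, `rule_id`) and the output labels are assumed names, and in the real pipeline the whitelists live in Redis.

```python
def route(event: dict, whitelist_ips: set, whitelist_rules: set) -> list:
    """Return (destination, event) pairs for one incoming alert."""
    # The original event is always kept as history.
    outputs = [("elastic", event)]
    whitelisted = (
        event.get("srcip") in whitelist_ips
        or event.get("rule_id") in whitelist_rules
    )
    if not whitelisted:
        # Only the clone travels on to the SIEM.
        outputs.append(("siem", dict(event)))
    return outputs


routes = route({"srcip": "10.0.0.5", "rule_id": 42}, {"10.0.0.5"}, set())
```

Because the filter only affects the clone, tightening or loosening a whitelist never changes what is stored for later investigation.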

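Update events matching a rule.id, setting event.action to block so that the SIEM response module can "consume" them later. See the red line in the figure above. The main purpose is to use the event.action field to distinguish the automated actions taken by the SIEM.

For events with a specified rule.id, update the allowed value to block so that the response module can "consume" them later.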

1 | filter { |

1 | require "redis" |


| No | File | Script | Log | Note |
|---|---|---|---|---|
| 1 | 60_normalized-general.conf | | | |
| 2 | 61_normalized-alert.conf | | | |
| 3 | 62_normalized-flow.conf | | | |
| 4 | 63_normalized-fileinfo.conf | | | |
| 5 | 64_normalized-http.conf | 64_normalized-http.rb | 64_normalized-http.log | |
| 6 | 65_normalized-tls.conf | | | |

| No | File | Script | Log | Note |
|---|---|---|---|---|
| 1 | 70_enrichment-general-geo-1-private_ip.conf | | | |
| 2 | 70_enrichment-general-geo-2-public_ip.conf | | | |
| 3 | 71_enrichment-alert-1-direction.conf | 71_enrichment-alert-1-direction.rb | | |
| 4 | 71_enrichment-alert-2-killChain.conf | 71_enrichment-alert-2-killChain.rb | 71_enrichment-alert-2-killChain.log | |
| 5 | 71_enrichment-alert-3-cve.conf | 71_enrichment-alert-3-cve.rb | 71_enrichment-alert-3-cve.log | |
| 6 | 71_enrichment-alert-4-whitelist_ip.conf | 71_enrichment-alert-4-whitelist_ip.rb | 71_enrichment-alert-4-whitelist_ip.log | |

Input

1 | # key: str killchain:{provider}:{rule id} |

Output

1 | { |

Input

1 | # key: str enrichment:{class}:{cve} |

Output

1 | { |

| No | File | Script | Log | Note |
|---|---|---|---|---|
| 1 | 85_threatintel-siem-event-1-shodan.conf | 85_threatintel-siem-event-1-shodan.rb | | |

Input

1 | # key: list spider:{provider}:ioc |

Output

1 | { |

Input

1 | # key: str whitelist:{class}:{ip} |

Output

1 | { |

Differences

1 | { |

1 | { |

Input

1 | # key: str whitelist:{class}:{provider}:{rule id} |

Workflow

Input

1 | # key: str whitelist:{class}:{provider}:{rule name} |

Workflow

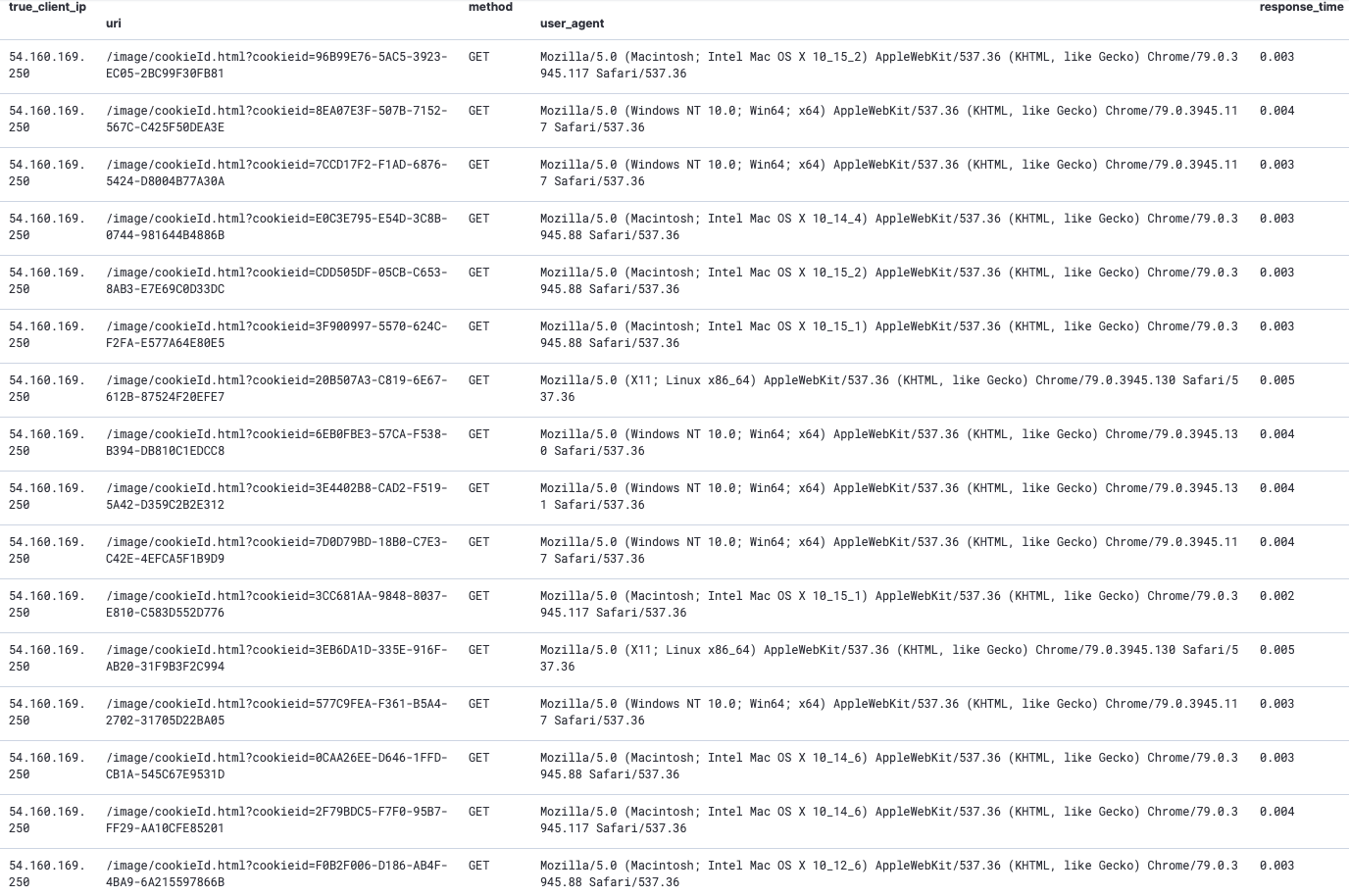

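Because of the particularities of AWS traffic mirroring, only HTTP and DNS traffic is currently fed in from the production network, and both Zeek and Suricata are used to analyze and alert on it. Suricata handles signature-based detection and Zeek handles script-based detection of custom events, so each does its own job. A few days ago, a business interface triggered Pingdom alerts. Analysis showed that some IPs were enumerating a parameter of that interface. The values supplied were large, and the backend placed no limit on the depth of that parameter, so the server kept computing until the interface stopped responding.

This requirement is met by extending the alert model of the **ElastAlert** alerting framework.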

1 | import sys |

1 | name: "Detection of Spider Crawlers" |

1 | { |

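find_spider: detects parameter enumeration. find_beacon is added here to provide a periodicity detection dimension. Many crawlers come with built-in time jitter and use crawler pools, so the effect is not especially pronounced.

find_beacon: better suited to detecting C2 connections, such as requests for DNS domains. Here is an alert for a detected periodic domain request: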

1 | { |
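One simple way to score periodicity, offered here as an illustration rather than as the logic of the find_beacon rule itself, is the coefficient of variation of the gaps between requests: regular beacons have nearly constant gaps, so the score approaches 1.0, while jittery traffic scores lower.

```python
from statistics import mean, pstdev


def beacon_score(timestamps: list) -> float:
    """Score in [0, 1]; 1.0 means perfectly regular request intervals."""
    if len(timestamps) < 3:
        return 0.0  # too few points to say anything about periodicity
    ordered = sorted(timestamps)
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    avg = mean(gaps)
    if avg == 0:
        return 0.0
    # Coefficient of variation: spread of the gaps relative to their mean.
    cv = pstdev(gaps) / avg
    return max(0.0, 1.0 - cv)


regular = beacon_score([0, 60, 120, 180, 240])
irregular = beacon_score([0, 1, 100, 101, 500])
```

This also shows why time jitter weakens the signal: every bit of randomness in the gaps raises the variation and lowers the score.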

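Within 1 hour, IP 54.160.169.250 accessed the interface 164 times and changed the cookieid parameter 133 times, 81% of total requests. It also rotated through 47 different user_agent values.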
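The figures in this alert can be reproduced with a small aggregation. The sketch below counts distinct parameter values as a proxy for "times changed" and works on a list of request dictionaries; both the record layout and the function name are assumptions, not the actual ElastAlert rule code.

```python
def spider_stats(requests: list, param: str = "cookieid") -> dict:
    """Summarize how much one client varies a parameter and its user agent."""
    total = len(requests)
    if total == 0:
        return {"total": 0, "distinct_values": 0, "change_ratio": 0.0, "distinct_user_agents": 0}
    distinct_values = len({r[param] for r in requests})
    distinct_agents = len({r["user_agent"] for r in requests})
    return {
        "total": total,
        "distinct_values": distinct_values,
        "change_ratio": distinct_values / total,
        "distinct_user_agents": distinct_agents,
    }


# Synthetic data shaped like the alert: 164 requests, 133 values, 47 agents.
sample = [{"cookieid": i % 133, "user_agent": i % 47} for i in range(164)]
stats = spider_stats(sample)
```

A rule would then compare `change_ratio` and `distinct_user_agents` against thresholds tuned for the interface in question.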

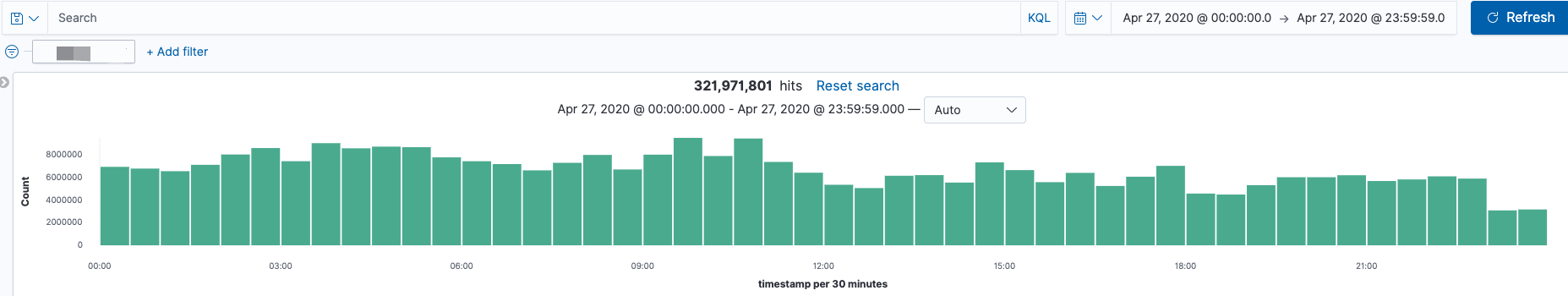

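Two NTA (Suricata) servers are deployed locally to receive traffic from 12 internal DNS servers and surface security problems in DNS requests. Recently, both NTA servers began restarting the Suricata process automatically after about 10 hours of running. The logs indicated, roughly, that memory was exhausted and a forced restart was needed to free it. Tracking this down took some time. First, DNS consists of small packets and averages only about 25 Mbps here, so excessive NIC traffic was unlikely to be the cause. Digging further: our application servers call each other's interfaces through internal domain names, so the DNS request volume is large. Kibana showed 320,000,000 to 350,000,000 dns_type: query events per day (and that is only dns_type: query; the dns_type: answer volume is also huge). Suricata cannot filter specified domains before output, which is a real shortcoming. The workaround at the time was to keep only dns_type: query events, which kept Suricata running and temporarily met the requirement.

Recently, jpg and png files containing malicious Payloads were uploaded through one of the site's upload interfaces. Suricata detected them, but this raised new requirements: determining whether the upload succeeded, and extracting files and their HASH. Suricata can do both, but with a drawback: once file_info is enabled, Suricata extracts a HASH for every protocol that supports file extraction. We are an e-commerce business with a large volume of external traffic, and Suricata does not support filtering by default, so even the HTML pages users visit get hashed. That volume is frightening.

Summary: for these 2 problems I need a more flexible NTA framework, which brings us to the star of this post: Zeek.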

dns-filter_external.zeek

1 | redef Site::local_zones = {"canon88.github.io", "baidu.com", "google.com"}; |

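Site::local_zones defines the internal domains, which are the ones we filter out by default. In my case these are mostly intranet domains;

Analyzer::enable_analyzer(Analyzer::ANALYZER_DNS); restricts analysis to DNS traffic only;

1 | { |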

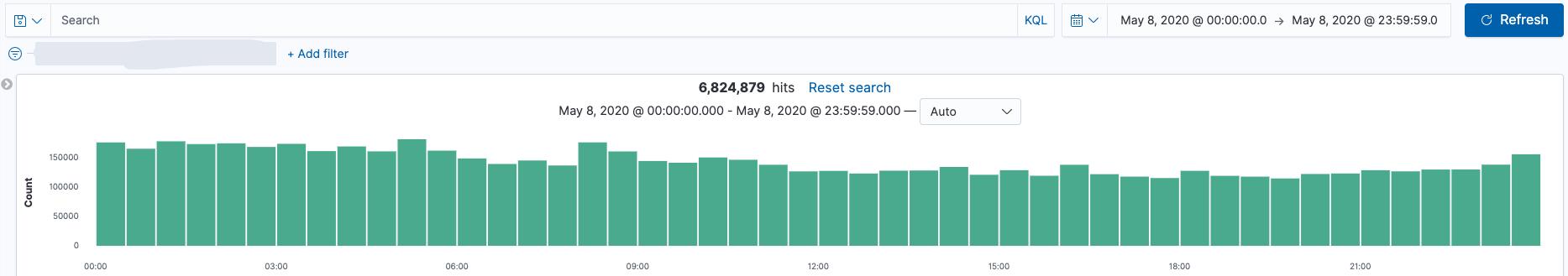

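With Zeek filtering DNS requests, the daily DNS volume is now 6,300,000 to 6,800,000 (query + answer), compared with 320,000,000 to 350,000,000 (query only) before. The reduction is obvious, and it also eases the storage pressure on the backend ES.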
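The zone-based filtering decision can be illustrated in Python (the actual filter is a Zeek script). The zone list is copied from the `Site::local_zones` line above; the suffix-matching helper is my own approximation of "the query falls inside a local zone".

```python
LOCAL_ZONES = {"canon88.github.io", "baidu.com", "google.com"}


def is_local_zone(query: str, zones=LOCAL_ZONES) -> bool:
    """True if the query is a local zone or a subdomain of one."""
    name = query.rstrip(".").lower()
    return any(name == zone or name.endswith("." + zone) for zone in zones)


def should_log(query: str) -> bool:
    # Queries for local zones are dropped; everything else is logged.
    return not is_local_zone(query)
```

Matching on a leading dot keeps look-alike names such as `notbaidu.com` from being treated as part of `baidu.com`.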

file_extraction.zeek

A demo script; the syntax is not very elegant, so please don't dwell on it.

1 | @load base/frameworks/files/main |

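1. Supports hash extraction and file extraction for a specified hostname, method, url, and file header;

2. By default, extracted files are stored by year, month, and day, and named after their MD5;

3. Enriches the file extraction log with HTTP-related fields;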

1 | { |

This is a sample image, extracted from traffic, that contains a malicious Payload

1 | $ ll /data/logs/zeek/extracted_files/ |

通过Zeek脚本扩展后,可以“随意所欲”的获取各种类型文件的Hash以及定制化的进行文件还原。

当获取文件上传的Hash之后,可以尝试扩展出以下2个安全事件:

判断文件是否上传成功。

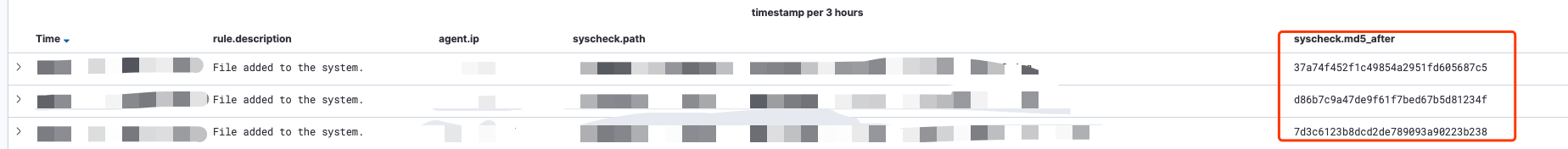

通常第一时间会需要定位文件是否上传成功,若上传成功需要进行相关的事件输出,这个时候我们可以通过采用HIDS进行文件落地事件的关联。

关联杀毒引擎/威胁情报。

将第一个关联好的事件进行Hash的碰撞,最常见的是将HASH送到VT或威胁情报。
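The first of these two correlations, joining network-side file events to host-side file events by hash, can be sketched as a simple join. The field names (`md5`, `uri`, `path`, `agent`) are assumptions for this example and will differ from the real Zeek and HIDS event schemas.

```python
def correlate_by_hash(network_files: list, host_files: list) -> list:
    """Join file events seen on the wire with files that landed on hosts."""
    by_md5 = {}
    for event in host_files:
        by_md5.setdefault(event["md5"], []).append(event)

    matches = []
    for event in network_files:
        for landed in by_md5.get(event["md5"], []):
            matches.append({
                "md5": event["md5"],
                "uri": event.get("uri"),
                "path": landed.get("path"),
                "agent": landed.get("agent"),
            })
    return matches


hits = correlate_by_hash(
    [{"md5": "abc", "uri": "/upload"}, {"md5": "zzz", "uri": "/img"}],
    [{"md5": "abc", "path": "/var/www/u/1.jpg", "agent": "web01"}],
)
```

Each match is evidence that an uploaded file actually reached disk, and its hash is the natural input for the second step (an antivirus or intelligence lookup).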

Taking Wazuh as an example, Zeek file extraction events are correlated with Wazuh new-file events, using the hash as the correlation indicator.

a. Zeek event

1 | { |

b. Wazuh event

1 | from elasticsearch import Elasticsearch |

1 | import datetime |

1 | date_list |

1 | chunk_size = 10000 |

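Preface

This is not some cutting-edge architecture or technology. It is simply how I combined the resources at hand in my own work. There are of course many ways to meet these requirements: those with budget can consider Splunk, and those without budget but with a development team can use Flink or Esper.

As attack and defense escalate, a single data source can rarely establish whether an attack succeeded. We therefore need to correlate security events across multiple data sources and distill high-confidence alerts for manual investigation. For example, WebShell uploads can be correlated across network traffic and endpoints, and Web attacks can be correlated across WAF and NIDS events to produce WAF-bypass alerts.

Wazuh itself can correlate security events, but in the traditional deployment the Wazuh Agent sends security events to the Wazuh Manager, which performs the correlation and alerting. Without ETL on the data, it is hard for the Wazuh Manager to correlate heterogeneous data. We therefore standardize the data in Logstash and send the standardized data to the Wazuh Manager via Syslog, enabling correlation across heterogeneous data.

syscheck alert events do not carry a srcip field by default; this can be solved with a preprocessing script on the Manager. Suricata (Wazuh agent) --(Agent: UDP 1514)--> Wazuh Manager

All standardization is done by Logstash; Filebeat only needs to forward events as-is.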
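What "standardizing heterogeneous data" means in practice can be shown with a small mapping function. The Suricata field names (`src_ip`, `dest_ip`, `alert.signature`) follow its EVE output; the WAF field names and the target schema (`srcip`, `dstip`, `signature`) are assumptions for illustration, and the real work here is done by Logstash filters.

```python
def normalize(event: dict, product: str) -> dict:
    """Map a product-specific event onto one shared set of field names."""
    if product == "suricata":
        fields = {
            "srcip": event.get("src_ip"),
            "dstip": event.get("dest_ip"),
            "signature": event.get("alert", {}).get("signature"),
        }
    elif product == "waf":
        # Hypothetical WAF log layout.
        fields = {
            "srcip": event.get("client_ip"),
            "dstip": event.get("server_ip"),
            "signature": event.get("rule"),
        }
    else:
        raise ValueError(f"unsupported product: {product}")
    return {"product": product, **fields}


nids = normalize({"src_ip": "198.51.100.9", "dest_ip": "10.0.0.5",
                  "alert": {"signature": "SQLi attempt"}}, "suricata")
waf = normalize({"client_ip": "198.51.100.9", "server_ip": "10.0.0.5",
                 "rule": "SQLi"}, "waf")
```

Once both events expose the same `srcip` field, a correlation rule can match them without knowing which product produced them.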

workflow:

1 | #=========================== Filebeat inputs ============================= |

1 | input { |

1 | filter { |

1 | { |

1 | filter { |

1 | output { |

1 | output { |

1 | import json |

1 | <ossec_config> |

Sample

1 | <!-- |

1 | <group name="suricata,"> |

1 | <group name="syscheck,"> |

1 | <group name="local,composite,"> |

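For WebShell correlation, we currently correlate on same-source IP and time order; comparing hashes would be the most reliable. A complaint about Suricata's default fileinfo: its output cannot be customized, and once enabled, every protocol that supports file extraction emits fileinfo events. As a result, large data volumes put heavy pressure on the Wazuh engine. I tried defining a custom file-audit event with Lua, but it apparently cannot distinguish protocols either, let alone filter output on custom HTTP conditions.

Correlation rules raise alert reliability by correlating multiple underlying security events across dimensions. Inaccurate underlying events therefore produce many false positives in the correlation rules above them, so tuning the underlying security events is an ongoing task.

Wazuh v3.11.4 triggers a memory violation when receiving large logs over syslog, causing the ossec-remoted process to restart. The issue has been reported to the community and should be fixed in the next release.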
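The same-source-IP and time-order correlation mentioned in the first note can be sketched as below. This illustrates the logic only (Wazuh expresses it with composite rules); the event keys `srcip` and `ts` and the 120-second default window are assumptions.

```python
def correlate(alerts: list, file_events: list, window: int = 120) -> list:
    """Pair each network alert with file events from the same source IP
    that occur within `window` seconds after the alert."""
    pairs = []
    for alert in alerts:
        for file_event in file_events:
            same_ip = alert["srcip"] == file_event["srcip"]
            delay = file_event["ts"] - alert["ts"]
            if same_ip and 0 <= delay <= window:
                pairs.append((alert, file_event))
    return pairs


pairs = correlate(
    [{"srcip": "198.51.100.9", "ts": 1000}],
    [
        {"srcip": "198.51.100.9", "ts": 1060},   # same IP, inside the window
        {"srcip": "198.51.100.9", "ts": 2000},   # same IP, too late
        {"srcip": "203.0.113.1", "ts": 1010},    # different IP
    ],
)
```

The weakness the note points out is visible here: anything sharing an IP and a time window gets paired, which is why a hash comparison would be more reliable.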

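The platform currently ingests Suricata alert rules. Because of how the traffic mirror is sourced, some rules produce **false** alerts, so IP address filtering is needed for those rules.

Each key must be unique and is terminated with a colon (:). For IP addresses, the dot notation is used for subnet matches: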

| key | CIDR | Possible matches |
|---|---|---|
| 192.168.: | 192.168.0.0/16 | 192.168.0.0 - 192.168.255.255 |
| 172.16.19.: | 172.16.19.0/24 | 172.16.19.0 - 172.16.19.255 |
| 10.1.1.1: | 10.1.1.1/32 | 10.1.1.1 |
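The dot-notation matching in this table behaves like a string prefix match, which the following sketch imitates. It models the table above and is not Wazuh's CDB implementation; the `cdb_match` helper is hypothetical.

```python
def cdb_match(ip: str, keys: list) -> bool:
    """Return True if the IP matches any key written in dot notation."""
    for key in keys:
        prefix = key.rstrip(":")  # keys are terminated with a colon
        if prefix.endswith("."):
            # Trailing dot: subnet-style prefix match.
            if ip.startswith(prefix):
                return True
        elif ip == prefix:
            # No trailing dot: exact host match.
            return True
    return False


keys = ["192.168.:", "172.16.19.:", "10.1.1.1:"]
```

Because the prefix includes the trailing dot, `172.16.19.:` matches `172.16.19.200` but not `172.16.190.1`.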

1 | $ vim /var/ossec/etc/lists/private_ip |

Since Wazuh v3.11.3, CDB lists are built and loaded automatically when the analysis engine is started. Therefore, when adding or modifying CDB lists, it is no longer necessary to run ossec-makelists; just restart the manager.

Versions before 3.11.3 need to run:

1 | $ /var/ossec/bin/ossec-makelists |

1 | $ vim /var/ossec/etc/ossec.conf |

1 | $ systemctl restart wazuh-manager |

1 | <var name="SAME_IP_TIME">120</var> |

1 | $ /var/ossec/bin/ossec-logtest |